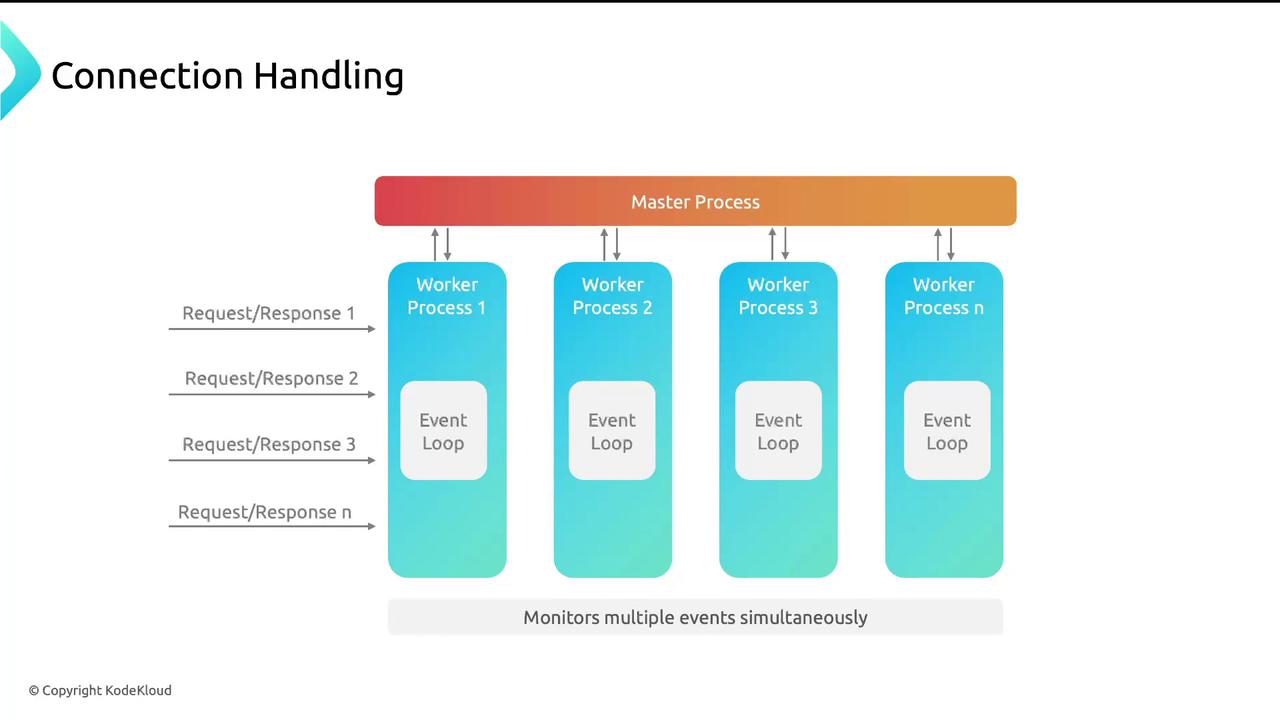

Nginx Master and Worker Processes

Nginx follows a master–worker architecture:

- The master process reads configuration files, manages worker lifecycles, and monitors for reloads.

- Each worker process runs an event loop, independently handling client connections and events.

Configuring Workers

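Two directives in `nginx.conf` control worker capacity:

- `worker_processes auto;` starts one worker process per available CPU core.

- `worker_connections 512;` sets the maximum number of simultaneous connections per worker (default: 512). It belongs inside the `events` block.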
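The snippet below is a minimal `nginx.conf` sketch of these two settings. `worker_processes` goes in the top-level (main) context, while `worker_connections` must sit inside the `events` block. The value 512 simply mirrors the default and is not a tuning recommendation.

```nginx
# Main (top-level) context
worker_processes auto;        # one worker per available CPU core

events {
    worker_connections 512;   # max simultaneous connections per worker
}
```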

Setting `worker_connections` too high may exhaust file descriptors. Check the per-process limit with `ulimit -n` and raise your OS limits accordingly.
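Besides raising the shell or OS limit, Nginx can raise the descriptor limit for its workers itself with the `worker_rlimit_nofile` directive (main context). This is a sketch with illustrative numbers, not recommendations. Keep the file limit comfortably above `worker_connections`, since proxied requests and open files also consume descriptors.

```nginx
# Main context: raise RLIMIT_NOFILE for worker processes
# without restarting the master process (4096 is illustrative)
worker_rlimit_nofile 4096;

events {
    # Illustrative value, kept well below worker_rlimit_nofile:
    # a proxied request uses a client connection plus an upstream
    # connection, and files being served also use descriptors.
    worker_connections 1024;
}
```

System-wide limits still apply, so check those too if Nginx logs "Too many open files".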

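For example, with 4 worker processes and `worker_connections 512;`:

- Workers: 4

- Connections: 4 × 512 = 2048

In general, the theoretical maximum number of simultaneous connections is `worker_processes` × `worker_connections`. This count includes connections to proxied servers, so a reverse proxy can serve only about half that many clients at once.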

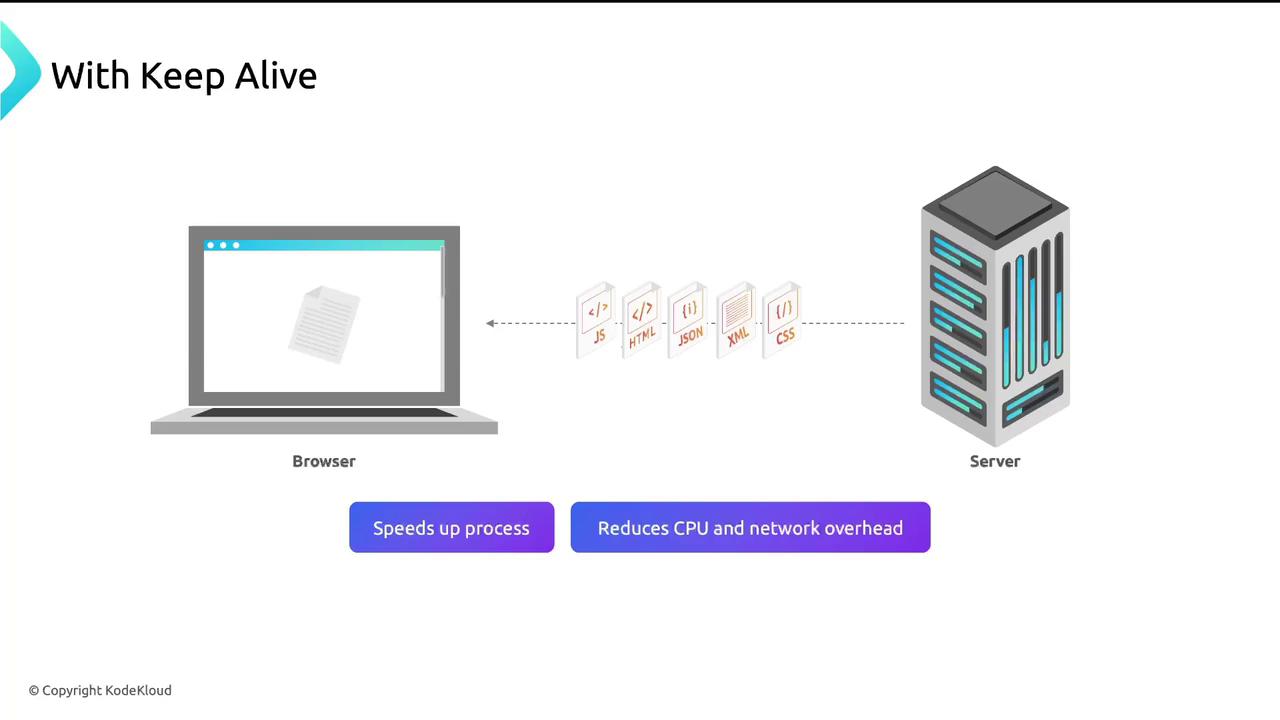

Keep-Alive Connections

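Persistent (keep-alive) connections reuse a single TCP connection for multiple HTTP requests. This avoids a new TCP handshake (and TLS handshake, if any) for every request.

Client keep-alive behavior is configured in the `http` block.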
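Here is a sketch of client keep-alive settings in the `http` block. The directive names are standard Nginx, but the values are illustrative assumptions to tune against your traffic.

```nginx
http {
    # Close a client connection that has been idle for 65 seconds
    keepalive_timeout 65s;

    # Close a keep-alive connection after it has served this many requests
    keepalive_requests 1000;
}
```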

Upstream Keep-Alive

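If Nginx proxies to backend servers, keep idle connections to them open as well. In the `upstream` block, the `keepalive` directive sets how many idle connections each worker caches. The proxying `location` in the `server` block also needs two directives: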

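- `proxy_http_version 1.1;` switches proxied requests to HTTP/1.1 (Nginx uses HTTP/1.0 by default), which upstream keep-alive requires.

- `proxy_set_header Connection "";` clears the `Connection` header so Nginx does not send its default `Connection: close` upstream.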
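The sketch below puts the upstream pieces together. It assumes a single hypothetical backend group named `backend` with one server at `127.0.0.1:8080`; both are placeholders. The `keepalive 32;` pool size is likewise an illustrative value.

```nginx
http {
    upstream backend {                 # hypothetical upstream name
        server 127.0.0.1:8080;         # hypothetical backend address
        keepalive 32;                  # idle upstream connections cached per worker
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;
            proxy_http_version 1.1;          # keep-alive requires HTTP/1.1 upstream
            proxy_set_header Connection "";  # drop the default "Connection: close"
        }
    }
}
```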

Reusing upstream connections avoids a new TCP (and possibly TLS) handshake for every proxied request, which can noticeably reduce backend latency. Always test under load!

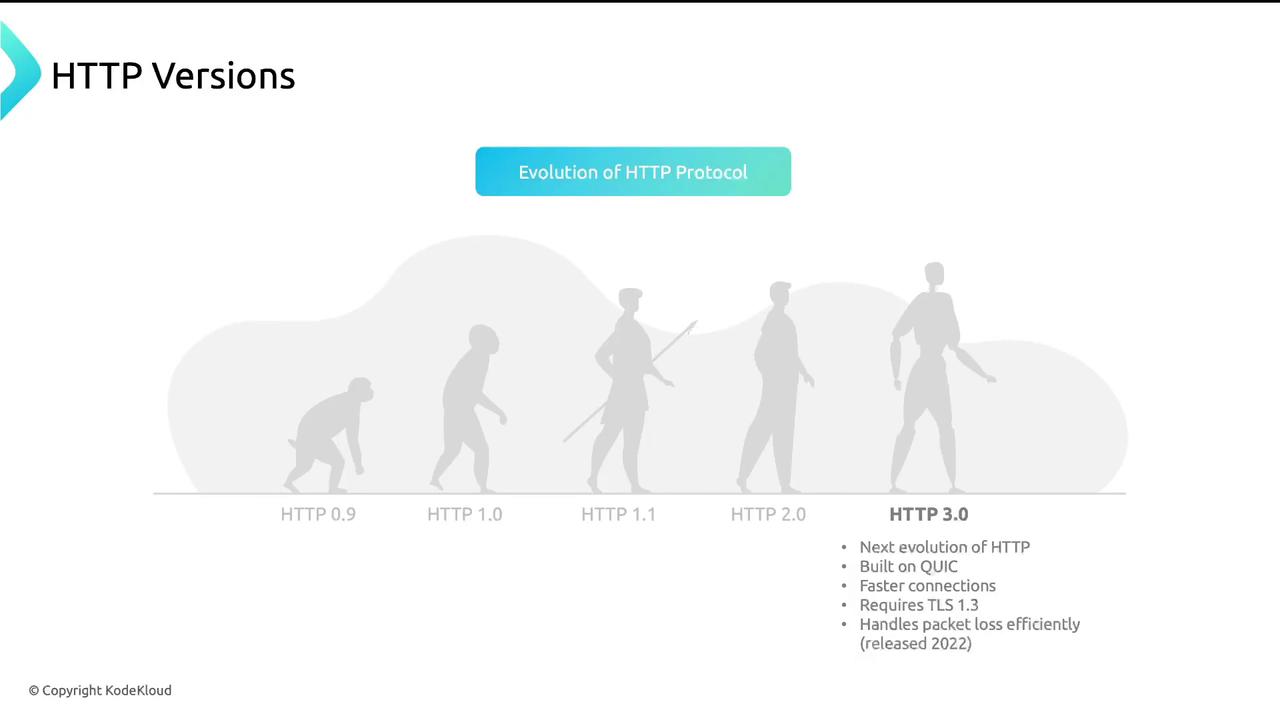

Evolution of HTTP

HTTP has evolved to improve performance, security, and flexibility.| HTTP Version | Key Features | Release |

|---|---|---|

| HTTP/0.9 | Simple GET, no headers or status codes | 1991 |

| HTTP/1.0 | Added status codes (200, 404), GET/POST methods | 1996 |

| HTTP/1.1 | Persistent connections, chunked transfer, caching, cookies, compression, pipelining | 1997 |

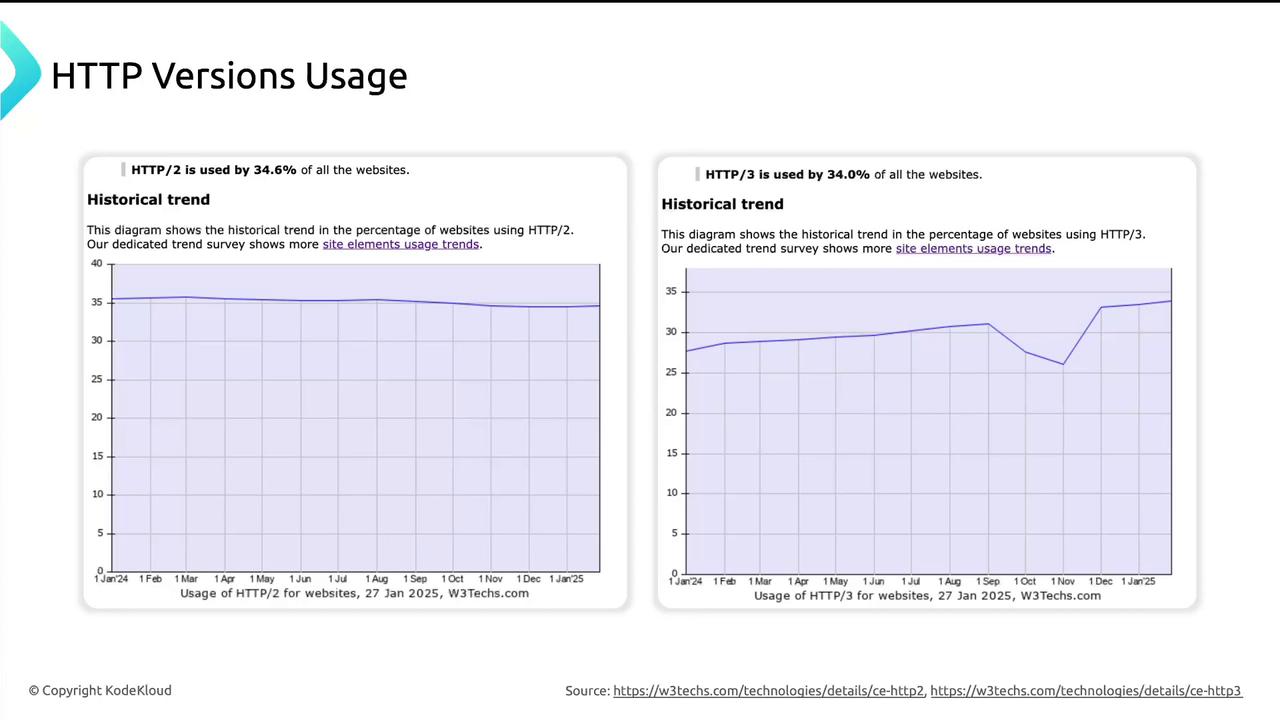

| HTTP/2 | Multiplexing, header compression (HPACK), binary framing | 2015 |

| HTTP/3 | QUIC over UDP, 0-RTT, built-in TLS 1.3, connection migration | 2020 |

- HTTP/2: Multiplexes many streams over one TCP connection and compresses headers.

- HTTP/3: Runs over QUIC (on UDP). This cuts connection-setup latency, avoids TCP-level head-of-line blocking, and supports connection migration.

TCP vs. UDP

| Protocol | Reliability | Use Cases | Transport Model |
|---|---|---|---|
| TCP | Ordered, error-checked | Web, email, file transfer | Connection-oriented |
| UDP | Unordered, best-effort | Gaming, streaming, VoIP | Connectionless |

Optimizing File Transfers with sendfile

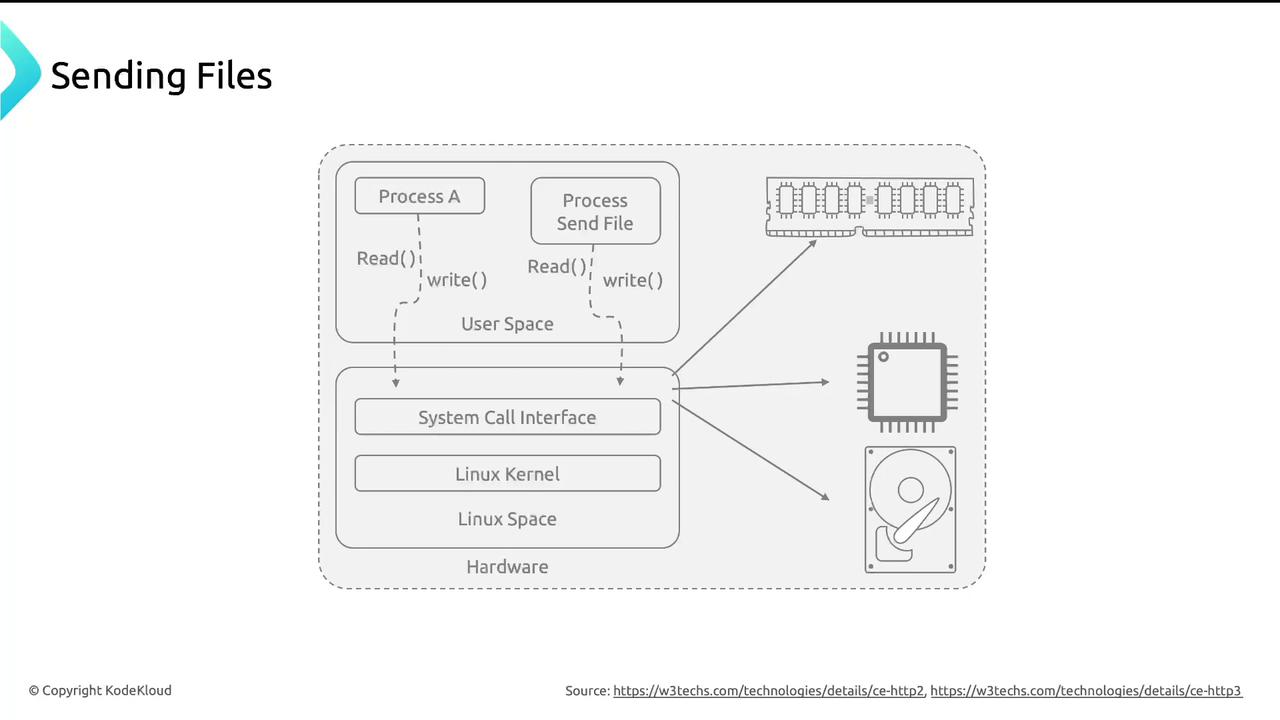

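By default (`sendfile off`), Nginx serves a file by reading it into a user-space buffer and then writing that buffer to the socket. This extra copy costs CPU time and memory. With `sendfile` enabled, Nginx uses the `sendfile()` system call instead, so the kernel copies data directly from the file to the socket.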
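This is a minimal sketch enabling `sendfile`. The optional `sendfile_max_chunk` line caps how much data one `sendfile()` call transfers, so a single fast connection cannot monopolize a worker. The 512k value is an assumption, not a recommendation.

```nginx
http {
    # Let the kernel copy file data straight to the socket,
    # skipping the user-space buffer
    sendfile on;

    # Optional: limit the data sent per sendfile() call (illustrative value)
    sendfile_max_chunk 512k;
}
```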

On some platforms (e.g., older BSD variants), `sendfile` may behave differently. Test before deploying to production.

Reducing TCP Overhead with tcp_nopush

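Sending many small TCP packets increases protocol overhead. `tcp_nopush` enables `TCP_CORK` on Linux or `TCP_NOPUSH` on FreeBSD, and takes effect only when `sendfile` is on. With it, Nginx sends the response header and the beginning of a file in one packet, then sends the rest of the file in full packets.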
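`tcp_nopush` is typically enabled alongside `sendfile`, because Nginx applies it only when `sendfile` is in use. A minimal sketch:

```nginx
http {
    sendfile   on;   # tcp_nopush has no effect without sendfile
    tcp_nopush on;   # TCP_CORK on Linux, TCP_NOPUSH on FreeBSD
}
```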

By tuning these core options (worker processes and connections, keep-alive, `sendfile`, and TCP socket options such as `tcp_nopush`), you can improve Nginx performance and resource efficiency.