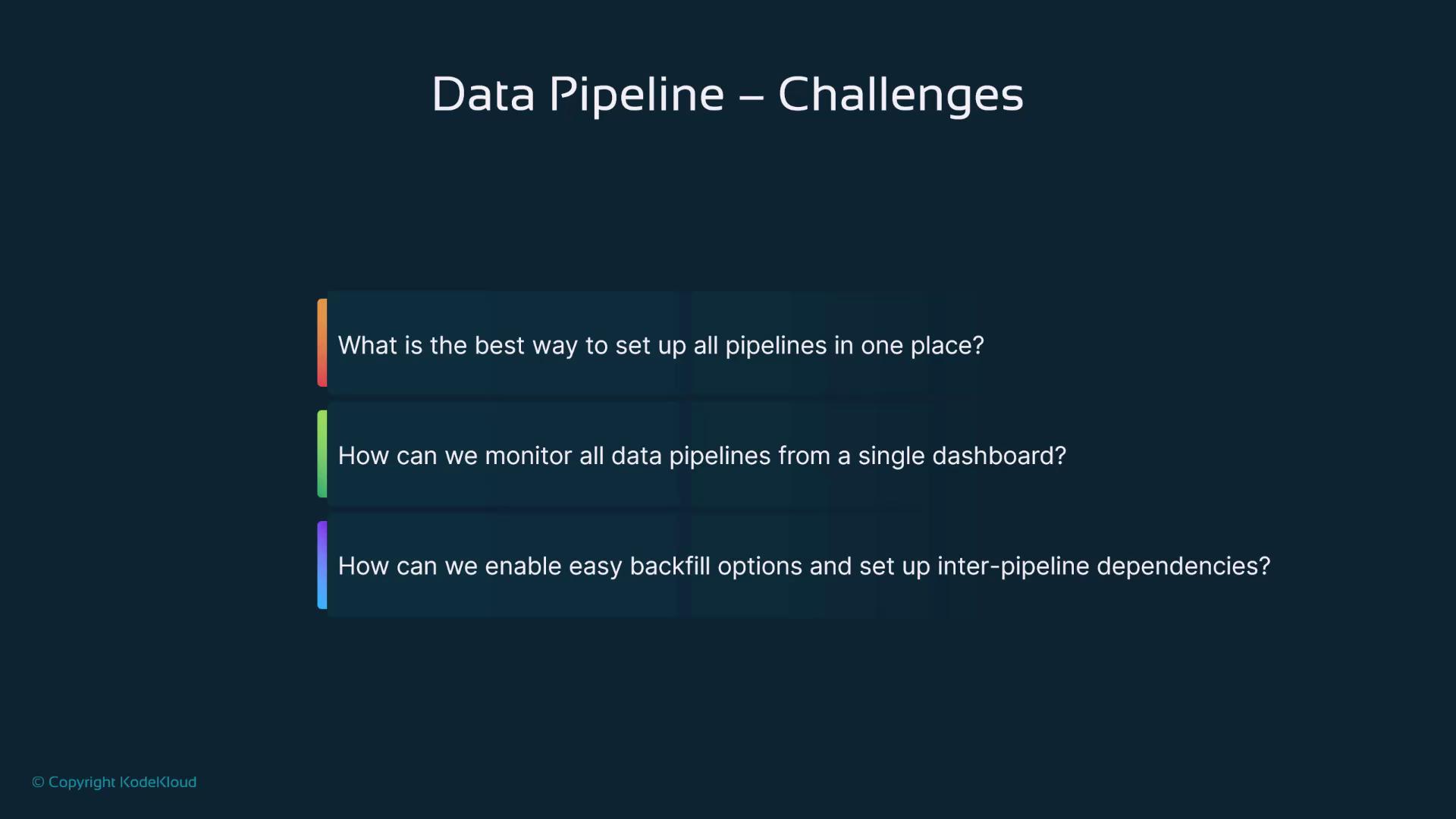

The Need for Advanced Orchestration

Modern organizations often run hundreds or even thousands of data pipelines, each delivering insights drawn from a different part of the business. Managing these pipelines with ad hoc scripts or cron jobs is inefficient and error-prone. Without a robust orchestration system, you quickly run into questions such as:

- How do you centrally set up and manage all pipelines?

- How can you monitor them from a unified dashboard or UI?

- How do you manage inter-pipeline dependencies and easily backfill data when needed?

Specialized orchestration tools such as Apache Airflow and Prefect address these challenges by centralizing pipeline management in a single, user-friendly interface.
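Backfilling means re-running a pipeline once for every past interval that needs (re)processing, and orchestrators enumerate those intervals for you. A minimal standard-library sketch of that step, assuming daily intervals and a hypothetical `run_pipeline(day)` placeholder for the real work, could look like this:

```python
from datetime import date, timedelta


def backfill_dates(start: date, end: date) -> list[date]:
    """Return every daily interval date from start to end, inclusive."""
    days = (end - start).days
    # An empty range (end before start) yields no runs.
    return [start + timedelta(days=i) for i in range(days + 1)]


def run_pipeline(day: date) -> str:
    # Hypothetical placeholder for one pipeline run over a single day's data.
    return f"processed {day.isoformat()}"


results = [run_pipeline(d) for d in backfill_dates(date(2024, 1, 1), date(2024, 1, 3))]
print(results)  # ['processed 2024-01-01', 'processed 2024-01-02', 'processed 2024-01-03']
```

Because each run covers exactly one interval, a single missed or failed day can be re-run on its own without touching the others.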

Key Benefits of Orchestration Tools

Orchestration tools simplify pipeline management in several key areas:

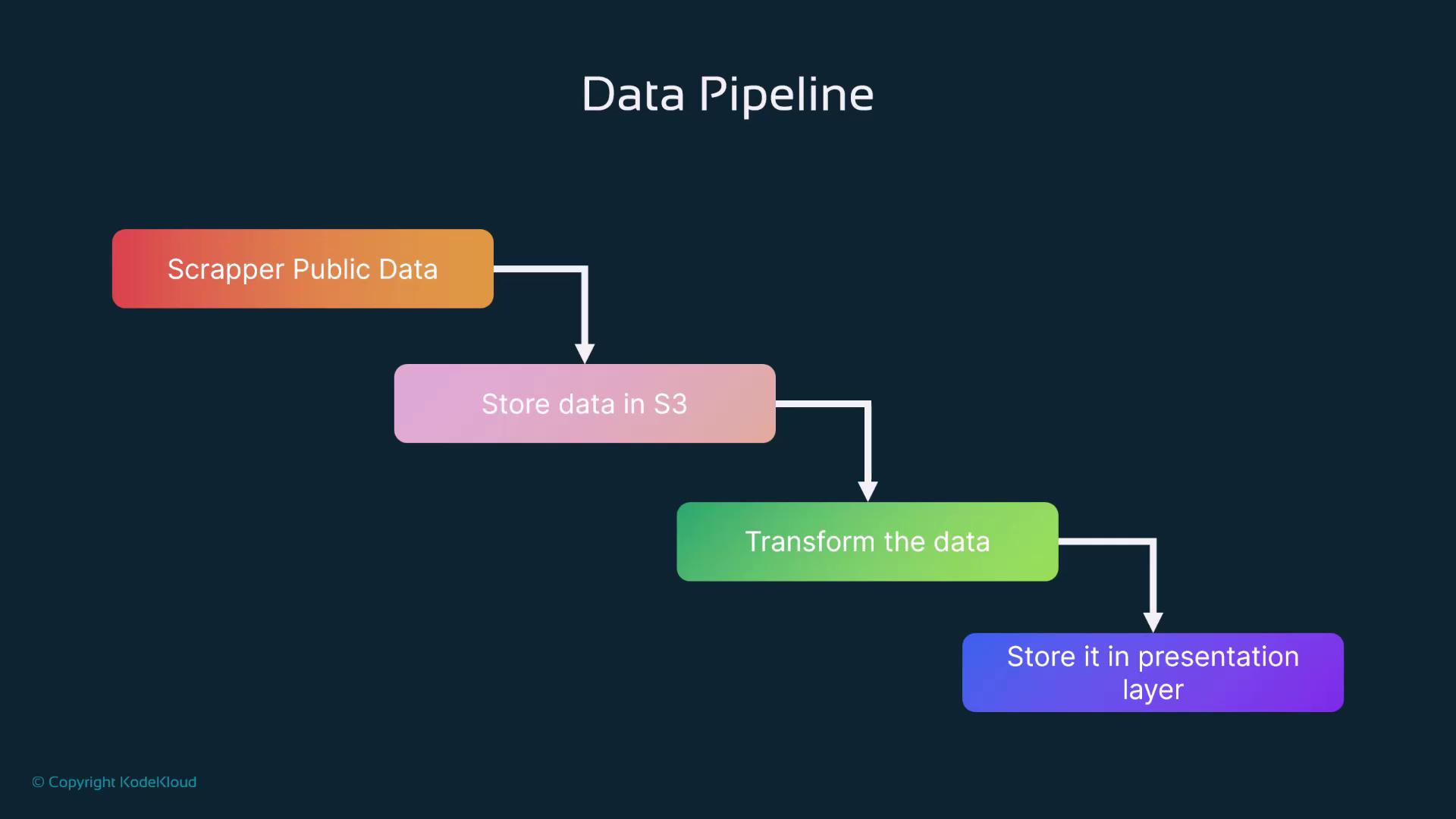

Automation and Scheduling:

Tasks such as data extraction and transformation can be fully automated to run on a schedule. For example, a retail company might schedule competitor product data scraping for midnight so that fresh insights are ready every morning.
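A daily midnight schedule corresponds to the cron expression `0 0 * * *`. At its core, a scheduler repeatedly computes the next run time; the sketch below does that for a daily midnight schedule with the standard library only. It assumes naive (time-zone-free) datetimes, and `next_midnight` is a hypothetical helper, not part of Airflow or Prefect, both of which also handle time zones, cron parsing, and missed runs.

```python
from datetime import datetime, timedelta


def next_midnight(now: datetime) -> datetime:
    """Return the first midnight strictly after `now` (naive datetimes, no time zones)."""
    start_of_today = now.replace(hour=0, minute=0, second=0, microsecond=0)
    return start_of_today + timedelta(days=1)


now = datetime(2024, 3, 15, 22, 30)
run_at = next_midnight(now)
wait_seconds = (run_at - now).total_seconds()
print(run_at)        # 2024-03-16 00:00:00
print(wait_seconds)  # 5400.0
```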

Robust Error Handling and Retries:

In real-world applications, errors are inevitable. These tools provide built-in error handling and automatic retries: if a task fails, it is retried according to configured settings, such as the number of retries and the delay between attempts, reducing the need for manual intervention.
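Automatic retries follow a simple pattern: call the task, and if it raises, wait and try again until a retry budget runs out. Airflow exposes this through task parameters such as `retries` and `retry_delay`, and Prefect through `retries` and `retry_delay_seconds` on tasks. The sketch below is a standard-library illustration of the pattern, not either tool's implementation; `run_with_retries` and `flaky_extract` are hypothetical names.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], retries: int = 3, delay_seconds: float = 0.0) -> T:
    """Call `task`; if it raises, retry up to `retries` more times, then re-raise."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= retries:
                raise  # retry budget exhausted: surface the failure
            attempt += 1
            time.sleep(delay_seconds)  # back off before the next attempt


calls = {"n": 0}


def flaky_extract() -> str:
    # Hypothetical task that fails on its first two calls, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "data"


result = run_with_retries(flaky_extract, retries=3)
print(result, calls["n"])  # data 3
```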

Scalable and Parallel Processing:

As data volumes grow, the ability to scale horizontally becomes critical. Orchestration tools let you add more workers so that independent tasks run in parallel, which is especially important in sectors like finance, where transaction volumes are massive.
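Horizontal scaling works because independent tasks can run at the same time. As a single-machine analogy, the sketch below splits illustrative transaction data into partitions and processes them with a thread pool, where `max_workers` stands in for the number of workers. Orchestrators apply the same idea across machines (Airflow, for example, through executors such as the CeleryExecutor or KubernetesExecutor); the partition data and the `process_partition` function are assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def process_partition(partition: list[float]) -> float:
    # Hypothetical task: total the transaction amounts in one partition.
    return sum(partition)


# Illustrative data: transactions split into independent partitions.
partitions = [[10.0, 20.0], [5.5, 4.5], [100.0]]

# max_workers stands in for the number of workers; pool.map returns
# results in the same order as the input partitions.
with ThreadPoolExecutor(max_workers=3) as pool:
    totals = list(pool.map(process_partition, partitions))

print(totals)       # [30.0, 10.0, 100.0]
print(sum(totals))  # 140.0
```

Threads keep the example short; for CPU-heavy work in Python, `ProcessPoolExecutor` is the closer single-machine analogy because separate processes are not limited by the GIL.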

Monitoring and Logging:

Detailed logging and continuous monitoring provide clear insight into pipeline performance. For instance, healthcare providers can monitor patient data flows to support compliance and operational efficiency.
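The kind of per-task record a monitoring dashboard aggregates can be sketched with Python's standard `logging` module: wrap each task, log when it starts and finishes, and capture its status and duration. The wrapper `run_logged` and the task names are hypothetical; Airflow and Prefect collect per-task logs and states for you.

```python
import logging
import time
from typing import Any, Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_logged(name: str, task: Callable[[], Any]) -> dict[str, Any]:
    """Run `task`, log its start, outcome, and duration, and return a run record."""
    start = time.perf_counter()
    log.info("task %s started", name)
    try:
        task()
        status = "success"
    except Exception:
        log.exception("task %s failed", name)  # also logs the traceback
        status = "failed"
    duration = time.perf_counter() - start
    log.info("task %s finished: status=%s, duration=%.3fs", name, status, duration)
    return {"task": name, "status": status, "duration_s": duration}


# Hypothetical healthcare tasks; the second one fails on purpose.
records = [
    run_logged("extract_patient_records", lambda: None),
    run_logged("validate_schema", lambda: 1 / 0),
]
print([(r["task"], r["status"]) for r in records])
# [('extract_patient_records', 'success'), ('validate_schema', 'failed')]
```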

Seamless Integration:

Platforms like Airflow and Prefect integrate with many external systems (AWS, GCP, custom APIs, databases, and more), so a single workflow can connect multiple services.

Spotlight on Airflow and Prefect

Let’s explore two of the most popular orchestration tools for data pipelines: Airflow and Prefect. Both excel at orchestrating complex workflows, scheduling tasks, and providing real-time monitoring.

Apache Airflow:

Airflow defines each pipeline as a DAG (directed acyclic graph) of tasks, and its rich user interface visualizes these task dependencies, making the sequential steps in a pipeline easier to manage. Its large community and extensive documentation have also made it a common choice in enterprise settings.
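In an Airflow DAG file, dependencies are declared with the bitshift operator, for example `[extract_orders, extract_customers] >> transform`. The sketch below uses the standard library's `graphlib.TopologicalSorter` rather than Airflow itself to show what such a dependency graph implies: a valid execution order, and the rejection of cycles. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the upstream tasks it depends on.
dag = {
    "transform": ["extract_orders", "extract_customers"],
    "load": ["transform"],
    "report": ["load"],
}

order = list(TopologicalSorter(dag).static_order())
print(order)  # both extracts first, then transform, load, report

# A cycle leaves no valid execution order, which is why the graph must be acyclic.
try:
    list(TopologicalSorter({"a": ["b"], "b": ["a"]}).static_order())
except CycleError:
    print("cycle detected: not a valid DAG")
```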

Prefect:

Prefect emphasizes modern, Pythonic APIs and simplified workflow design. It supports both time-based scheduling and event triggers, so a flow can start on a schedule or in response to an event while its extraction, transformation, and loading steps still run in the correct sequence.
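The sketch below illustrates event-triggered ETL in plain Python. It does not use Prefect's API (such as its `@flow` and `@task` decorators); the event registry, the `emit` function, and the task bodies are hypothetical stand-ins meant only to show how a trigger can start a flow whose steps still run in a fixed order.

```python
from typing import Callable


def extract() -> list[int]:
    return [1, 2, 3]  # hypothetical source rows


def transform(rows: list[int]) -> list[int]:
    return [r * 10 for r in rows]  # hypothetical transformation


def load(rows: list[int], target: list[int]) -> None:
    target.extend(rows)  # stand-in for writing to a warehouse


warehouse: list[int] = []


def etl_flow() -> None:
    # Each step consumes the previous step's output, so the order is fixed:
    # extract, then transform, then load.
    load(transform(extract()), warehouse)


# Hypothetical event registry: an event name maps to the flows it triggers.
triggers: dict[str, list[Callable[[], None]]] = {"file_arrived": [etl_flow]}


def emit(event: str) -> None:
    for flow in triggers.get(event, []):
        flow()


emit("unrelated_event")  # no flow registered, so nothing runs
emit("file_arrived")
print(warehouse)  # [10, 20, 30]
```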

Both Airflow and Prefect provide real-time dashboards and detailed logging. This is essential for identifying issues promptly: if a data source goes down, monitoring alerts can notify your team immediately.
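Alerting usually hangs off a failure hook: when a task fails, a callback sends a notification. Airflow, for example, accepts an `on_failure_callback` on tasks and DAGs. The standard-library sketch below shows the pattern with hypothetical names (`run_task`, `send_alert`, `fetch_from_source`); `send_alert` only records the message where a real setup would post to email, chat, or a paging service.

```python
from typing import Callable

alerts: list[str] = []


def send_alert(message: str) -> None:
    # Stand-in for a real notifier (email, chat webhook, pager); here we just record it.
    alerts.append(message)


def run_task(name: str, task: Callable[[], None], on_failure: Callable[[str], None]) -> bool:
    """Run `task`; on any exception, call `on_failure` with a message and return False."""
    try:
        task()
        return True
    except Exception as exc:
        on_failure(f"{name} failed: {exc}")
        return False


def fetch_from_source() -> None:
    # Hypothetical extraction step whose data source is down.
    raise ConnectionError("source unreachable")


ok = run_task("extract_sales", fetch_from_source, on_failure=send_alert)
print(ok, alerts)  # False ['extract_sales failed: source unreachable']
```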