Why Redis List Scaling?

Traditional CPU- or memory-based scaling won’t address a growing Redis queue. By using KEDA’s Redis List scaler, you can dynamically adjust pod replicas based on the number of pending items:

- Scale out when the backlog grows.

- Scale in when work completes.

- Avoid overprovisioning during low traffic.

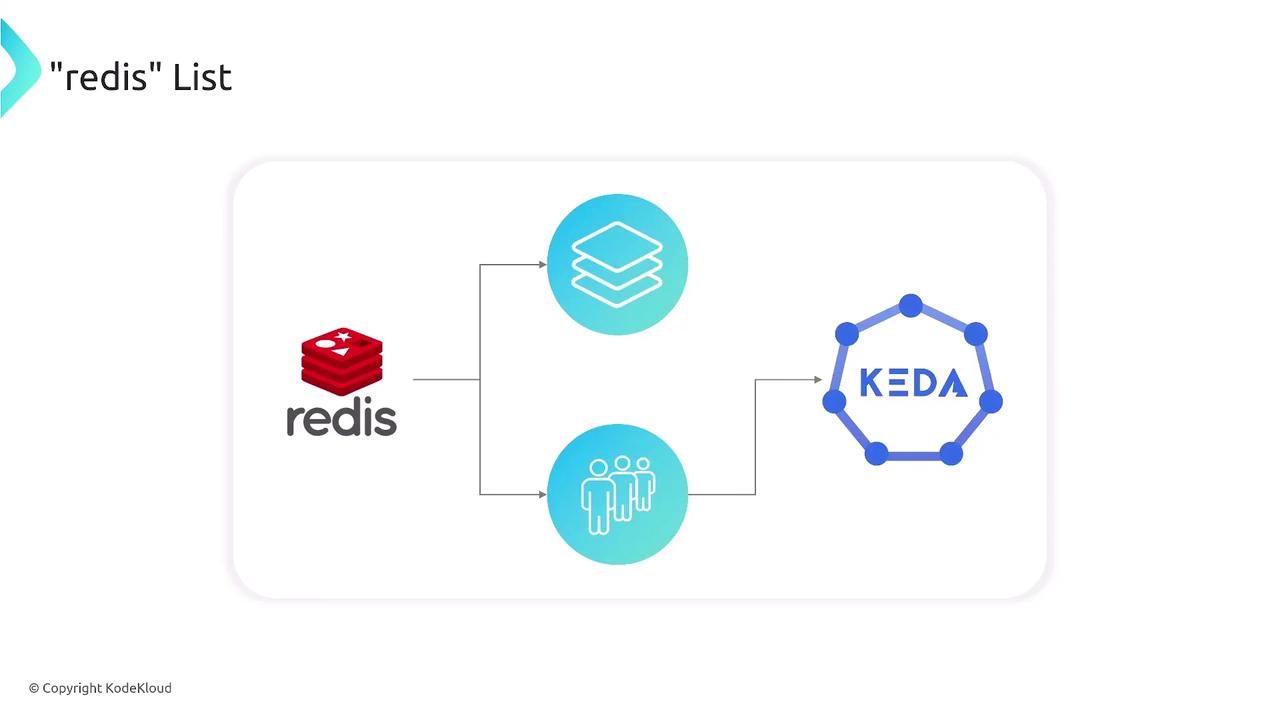

Architecture and Flow

Below is a high-level diagram showing how Redis, KEDA, and your worker deployment interact:

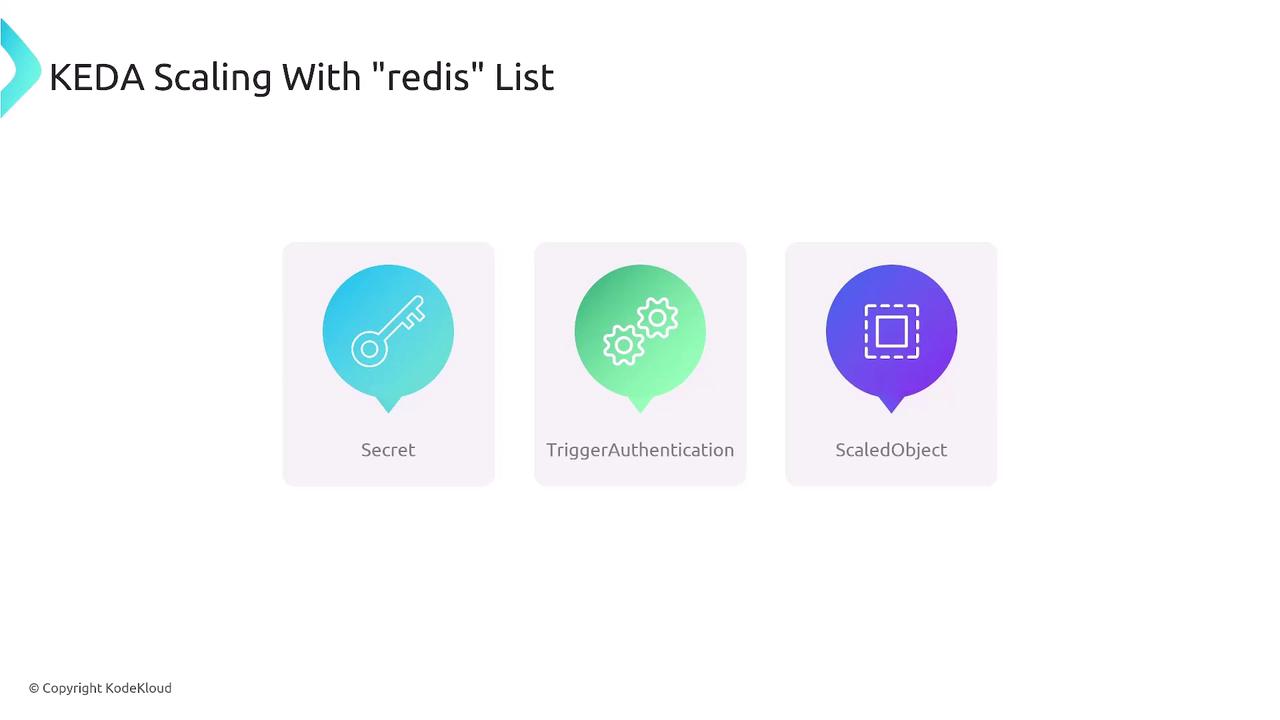

KEDA Resources Overview

| Resource | Purpose | Documentation |
|---|---|---|
| Secret | Stores the Redis password (Base64-encoded) | Kubernetes Secrets |
| TriggerAuthentication | References the secret to authenticate KEDA to Redis | KEDA Auth Concepts |
| ScaledObject | Defines how to scale your worker based on the list | KEDA ScaledObject |

1. Create the Redis Password Secret

Encode your Redis password in Base64 and store it in a Kubernetes Secret. Ensure you encode the plaintext password with base64. For example:

echo -n 'yourRedisPassword' | base64

2. Define TriggerAuthentication

Create a TriggerAuthentication so that KEDA can retrieve the password from the secret:
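A minimal sketch of the Secret from step 1 together with a TriggerAuthentication that references it. The resource names (`redis-secret`, `redis-trigger-auth`), the key `redis-password`, and the `default` namespace are assumptions made for illustration, not values from the original manifests. The encoded value is simply the Base64 form of the placeholder password `yourRedisPassword`.

```yaml
# Hypothetical names: redis-secret, redis-password, redis-trigger-auth.
apiVersion: v1
kind: Secret
metadata:
  name: redis-secret
  namespace: default
type: Opaque
data:
  # Output of: echo -n 'yourRedisPassword' | base64
  redis-password: eW91clJlZGlzUGFzc3dvcmQ=
---
apiVersion: keda.sh/v1alpha1
kind: TriggerAuthentication
metadata:
  name: redis-trigger-auth
  namespace: default
spec:
  secretTargetRef:
    # Maps the Secret key to the Redis scaler's "password" parameter
    - parameter: password
      name: redis-secret
      key: redis-password
```

The TriggerAuthentication and the Secret are expected to live in the same namespace as the ScaledObject that uses them.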

3. Configure the ScaledObject

Finally, define the ScaledObject to tie everything together:
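One possible ScaledObject is sketched below. The list name `myList` and the `nginx` workload come from this article. The Redis address, the TriggerAuthentication name, the replica bounds, and the `listLength` target are illustrative assumptions to adjust for your environment.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: redis-list-scaledobject      # hypothetical name
  namespace: default
spec:
  scaleTargetRef:
    name: nginx                      # Deployment running the worker pods (assumed name)
  pollingInterval: 30                # seconds between checks of the list length
  cooldownPeriod: 300                # seconds to wait before scaling back to the minimum
  minReplicaCount: 1
  maxReplicaCount: 10
  triggers:
    - type: redis
      metadata:
        address: redis.default.svc.cluster.local:6379   # assumed Redis host:port
        listName: myList
        listLength: "5"              # target number of pending items per replica
      authenticationRef:
        name: redis-trigger-auth     # hypothetical TriggerAuthentication name
```

With these assumed values, a backlog of about 50 items would push the target toward 10 replicas (50 / 5), capped by `maxReplicaCount`.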

Review your HPA policies carefully. Aggressive scaling (100% every 15 s) can lead to resource spikes. Adjust thresholds to match your cluster capacity.
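If the scaling rate needs taming, the ScaledObject accepts HPA behavior settings under `advanced.horizontalPodAutoscalerConfig`. The fragment below is a sketch to merge into the ScaledObject's `spec`. The percentages and time windows are assumed starting points, not recommendations from the original configuration.

```yaml
# Fragment: merge under the ScaledObject's existing spec.
spec:
  advanced:
    horizontalPodAutoscalerConfig:
      behavior:
        scaleUp:
          stabilizationWindowSeconds: 0
          policies:
            # Grow by at most 50% of current replicas per minute (assumed value)
            - type: Percent
              value: 50
              periodSeconds: 60
        scaleDown:
          # Wait 5 minutes of sustained low backlog before removing pods (assumed value)
          stabilizationWindowSeconds: 300
          policies:
            - type: Percent
              value: 50
              periodSeconds: 60
```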

With this configuration, KEDA will monitor the myList backlog in Redis and automatically scale the nginx worker pods, scaling out under heavy load and scaling in as the queue drains.