Always pin your LangChain version to ensure compatibility with course notebooks and examples.
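The pin itself normally lives in `requirements.txt` as a line like `langchain==<version>`. To catch version drift at notebook start-up, you can also compare the installed version against the pin at runtime. Below is a minimal sketch using only the standard library's `importlib.metadata`. `check_pinned` is a hypothetical helper, and `"X.Y.Z"` is a placeholder, not the course's actual pinned version.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def check_pinned(package: str, pinned: str) -> Optional[bool]:
    """Compare an installed package's version with the pinned one.

    Returns True on an exact match, False on a mismatch,
    and None if the package is not installed at all.
    """
    try:
        installed = version(package)
    except PackageNotFoundError:
        return None
    return installed == pinned


# Placeholder pin (hypothetical): replace "X.Y.Z" with the version your course specifies.
status = check_pinned("langchain", "X.Y.Z")
if status is None:
    print("langchain is not installed")
elif not status:
    print(f"langchain {version('langchain')} differs from the pinned version")
```

An exact string comparison is deliberately strict. If you want to allow patch-level updates, a version-specifier library would be the better tool.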

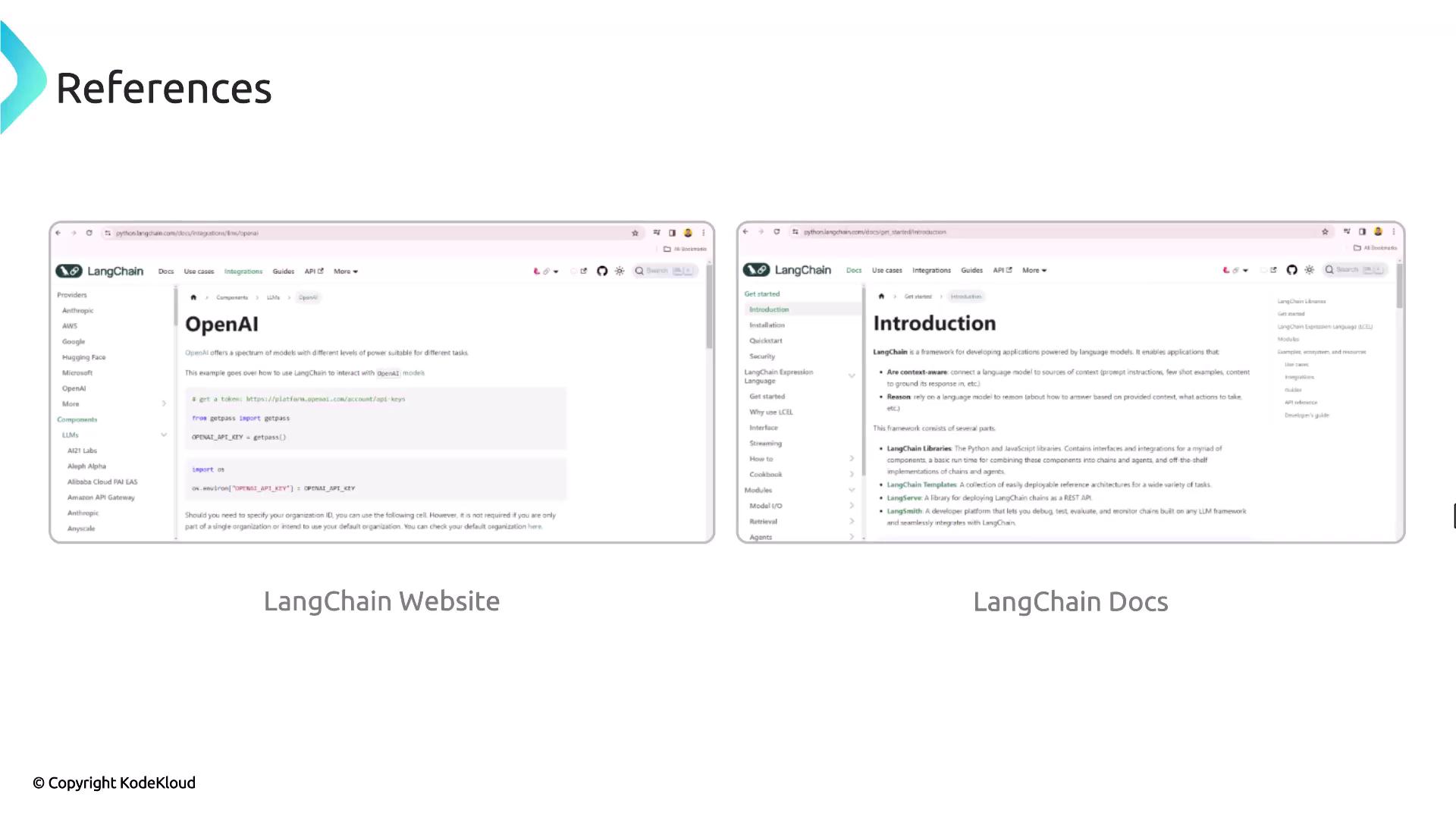

Official Website and Documentation

Start your LangChain journey by browsing the official site and documentation:

- Website: https://langchain.com
- Docs: https://langchain.readthedocs.io

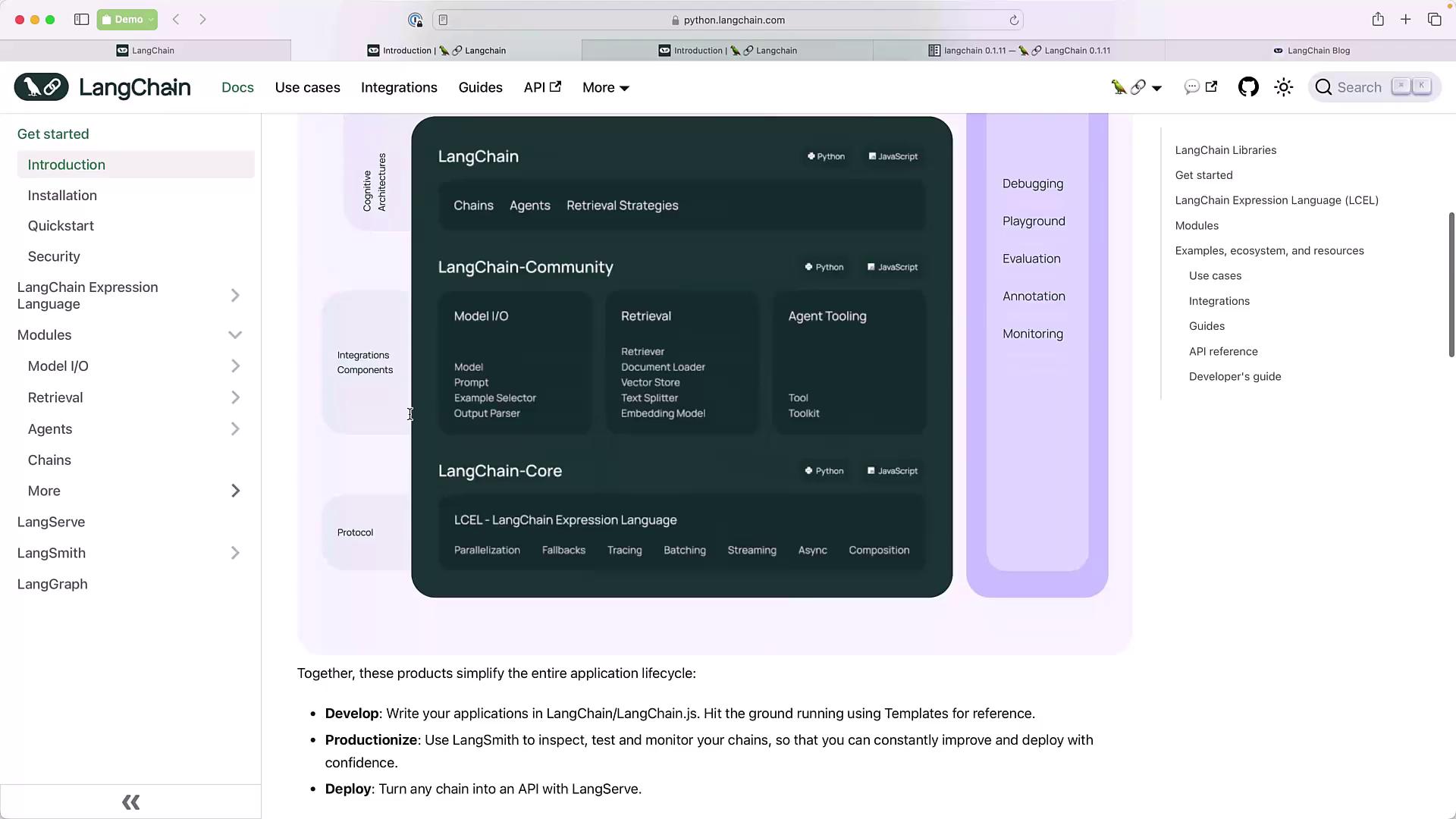

Documentation Overview

LangChain’s documentation covers everything from basic concepts to advanced modules:

| Module | Description |
|---|---|
| Model I/O | Formatting prompts, calling LLMs, and parsing their outputs |
| Prompt Engineering | Building and testing templates |
| Chat Models | Conversational interfaces |
| Output Parsers | Structured data extraction |
| Retrieval Agents | Querying external knowledge |
| Chains | Orchestrating multi-step processes |
| Memory | Context management between interactions |

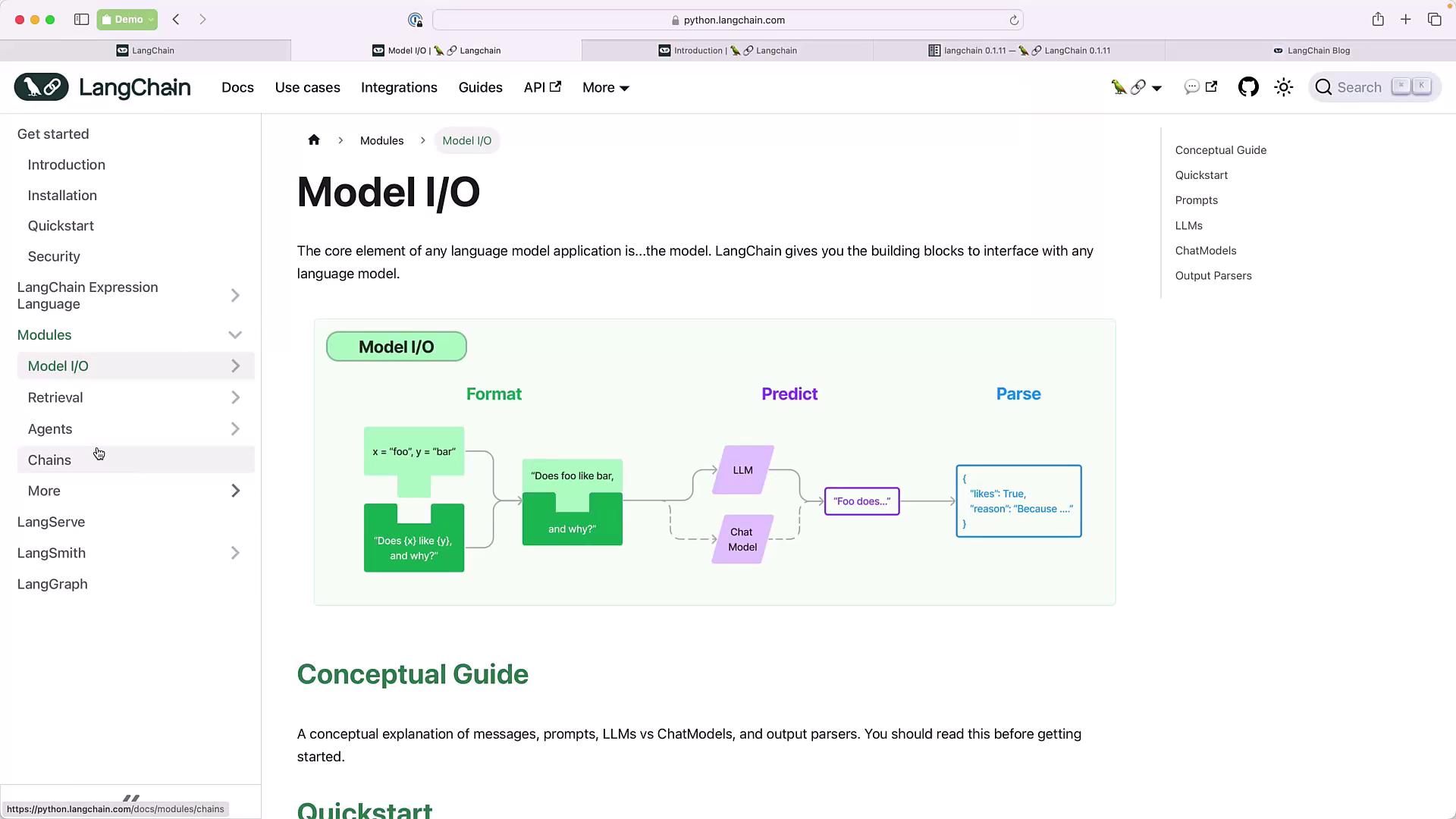

Core Modules and Model I/O

LangChain’s core sections include Model I/O, prompt engineering, chat models, output parsers, retrieval agents, chains, and memory. Newer modules such as LangServe, LangSmith, and LangGraph are under active development and not covered in this guide; apply for early access if you’d like to experiment with them.

Here’s a representative flowchart for Model I/O:

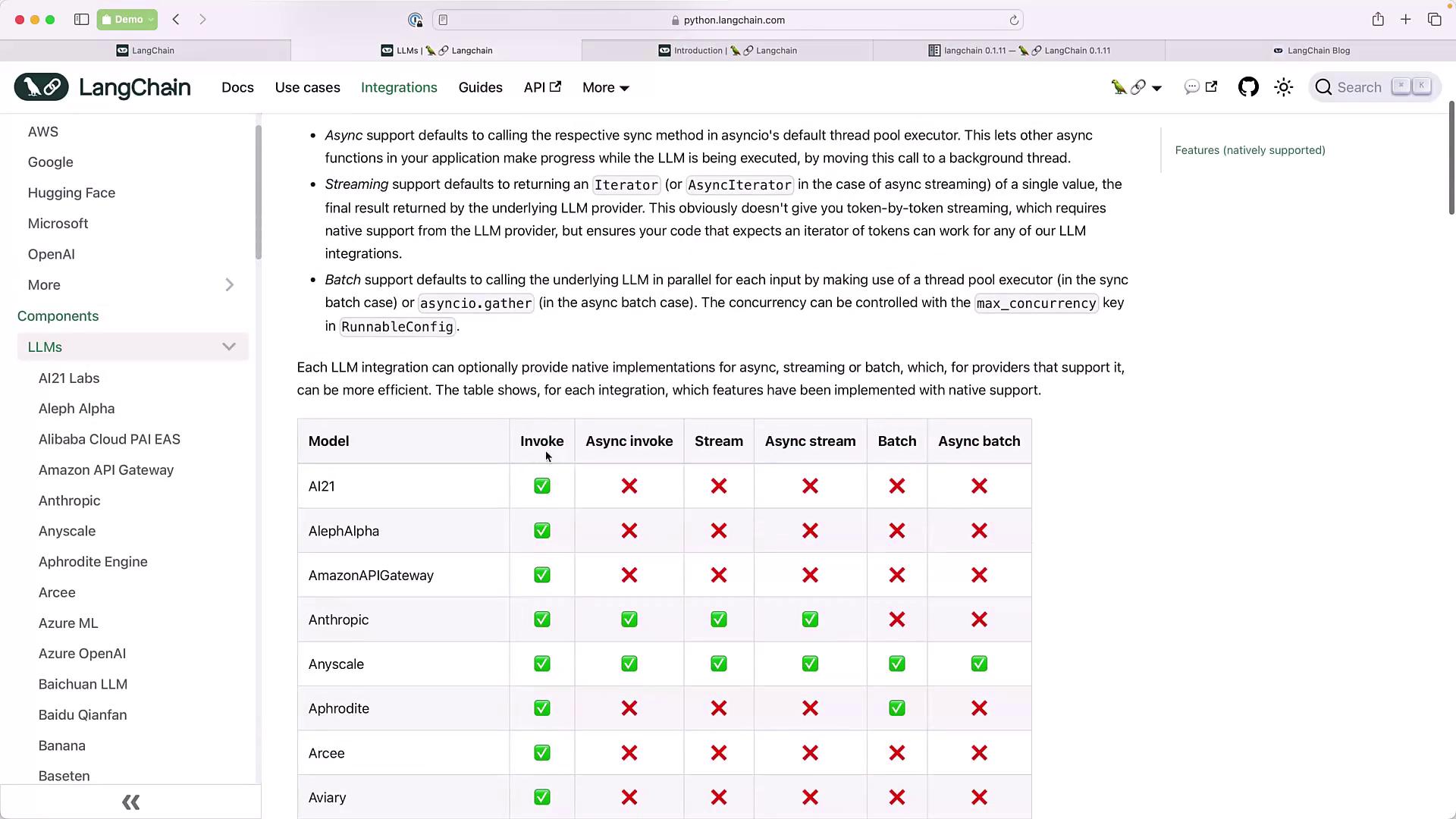
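Model I/O's three stages (format the prompt, call the model, parse the output) can be sketched without any library. Every function below is a hypothetical stand-in. `fake_llm` returns a canned string instead of calling a provider. `parse_comma_list` plays the role that an output parser such as LangChain's `CommaSeparatedListOutputParser` would play.

```python
from typing import Callable, List

# Hypothetical prompt template with one {category} placeholder
TEMPLATE = "List three {category}, separated by commas."


def format_prompt(template: str, **inputs: str) -> str:
    """Stage 1 (format): fill the template's placeholders with user inputs."""
    return template.format(**inputs)


def fake_llm(prompt: str) -> str:
    """Stage 2 (predict): offline stub that returns a canned completion."""
    return "red, green, blue"


def parse_comma_list(text: str) -> List[str]:
    """Stage 3 (parse): split raw model text into a clean list of items."""
    return [item.strip() for item in text.split(",") if item.strip()]


def run_model_io(model: Callable[[str], str], template: str, **inputs: str) -> List[str]:
    """Run format -> predict -> parse end to end."""
    prompt = format_prompt(template, **inputs)
    raw = model(prompt)
    return parse_comma_list(raw)


print(run_model_io(fake_llm, TEMPLATE, category="colors"))  # ['red', 'green', 'blue']
```

Keeping the model as a plain callable parameter means you can swap the stub for a real chat model call without touching the formatting or parsing stages.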

Third-Party Integrations

LangChain integrates with dozens of LLM providers, embedding models, and vector stores. You can filter integrations based on support for `invoke`, async, streaming, batch, and more:

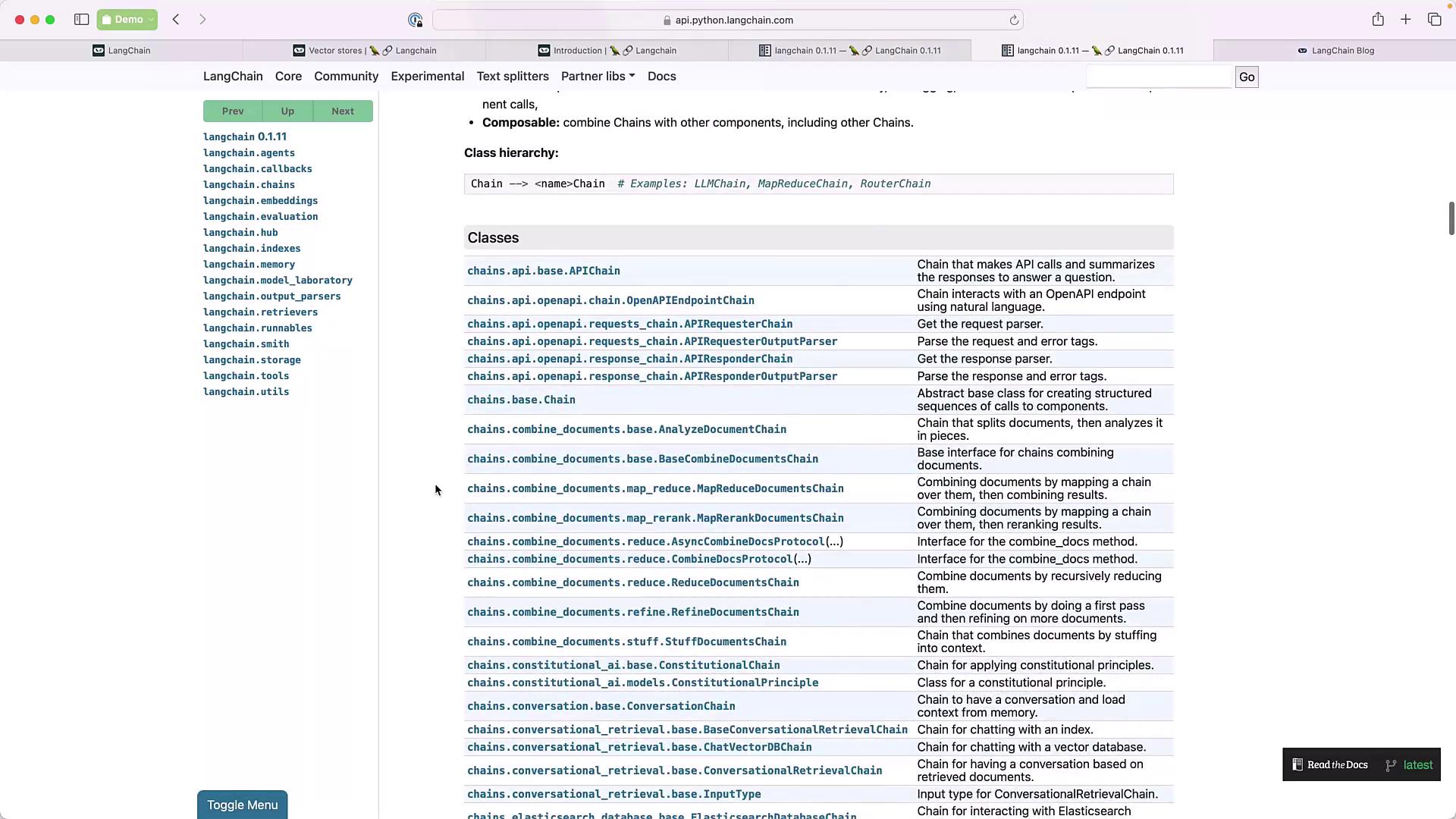

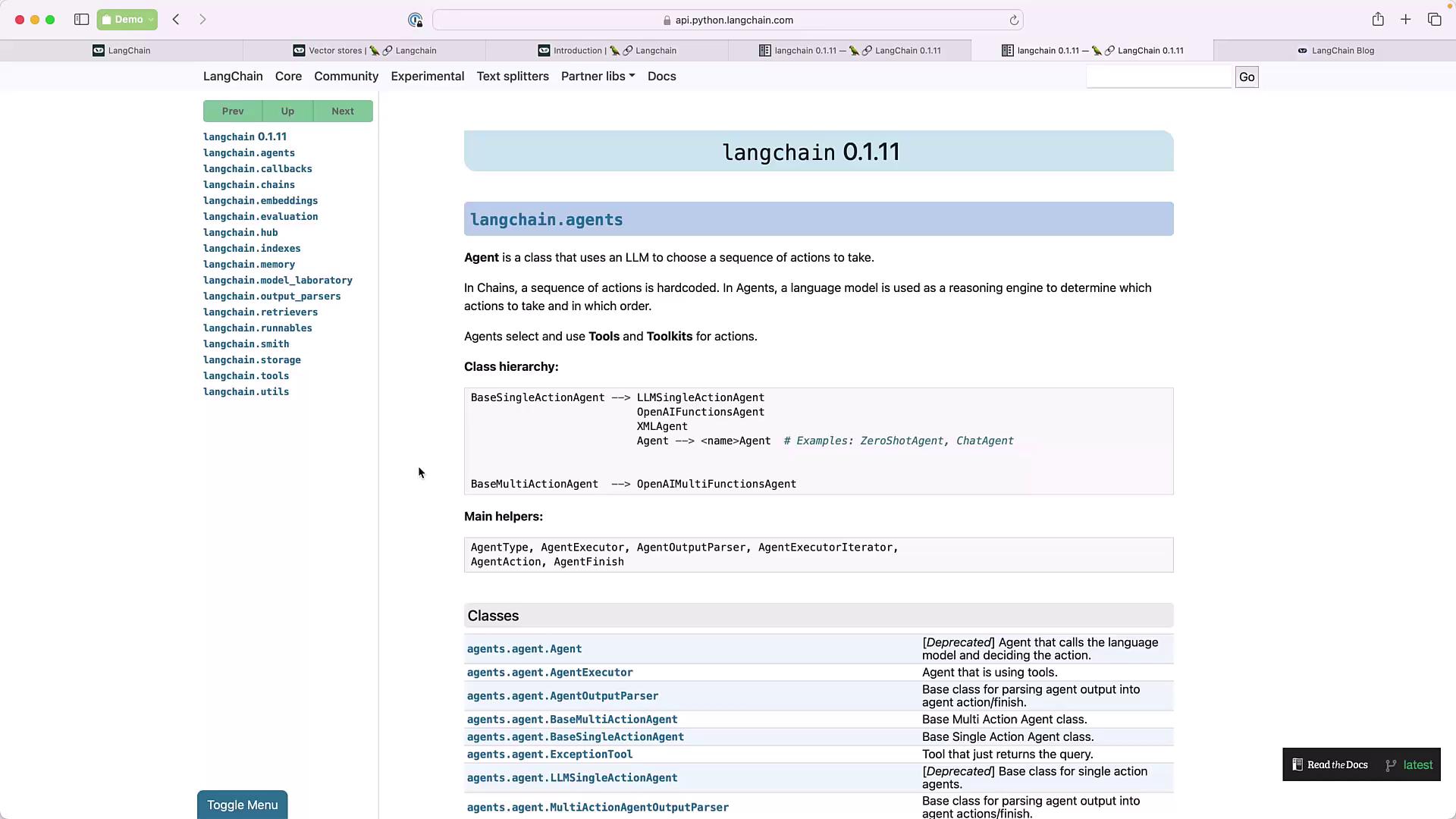

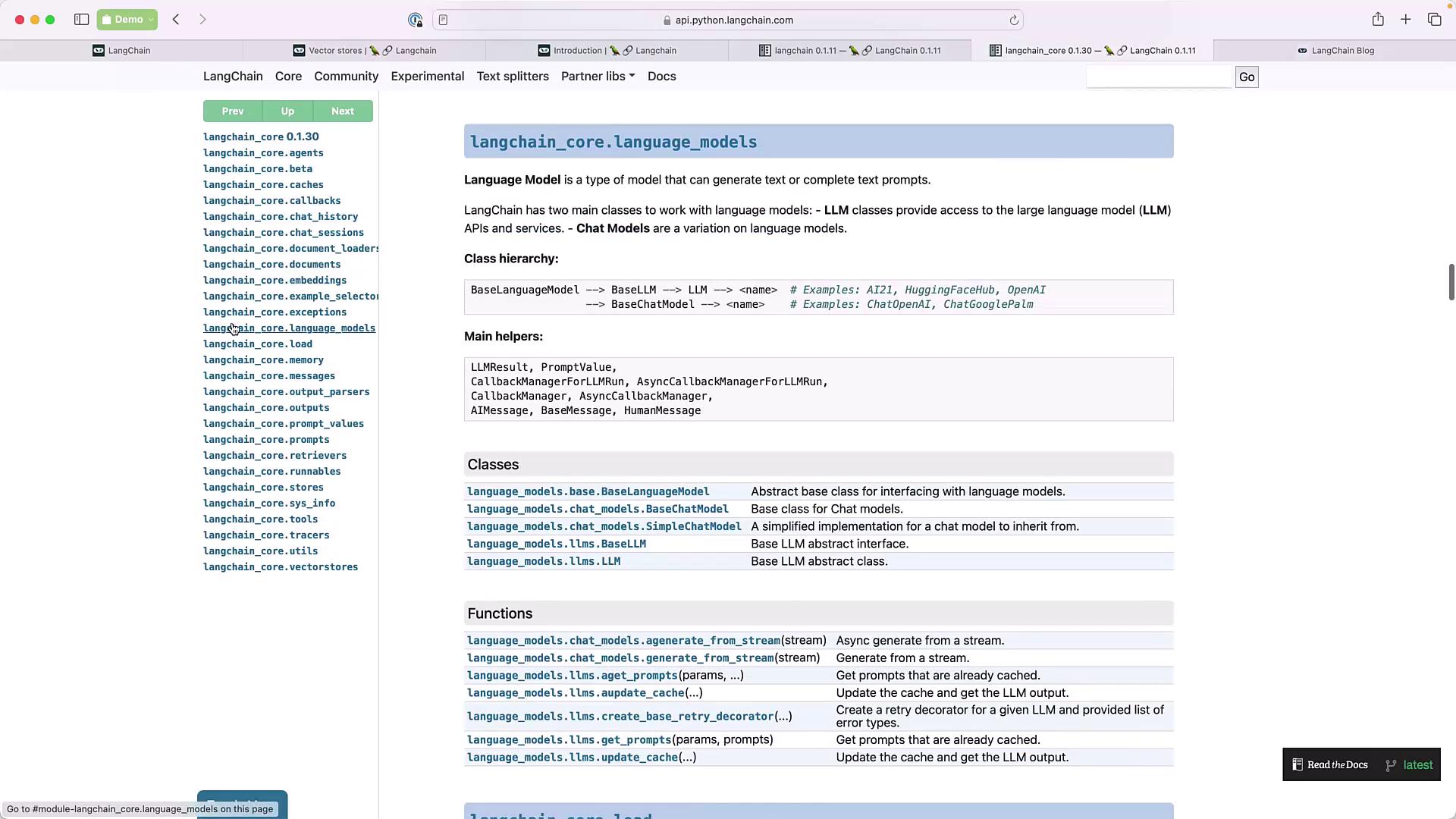
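The capability filters correspond to methods on LangChain's common model interface: `invoke` for a single call, `batch` for many inputs, `stream` for incremental output, and async counterparts such as `ainvoke`. The toy class below is a hypothetical stand-in that only mirrors those method names so you can see what each call shape returns. It is not LangChain's implementation, and its word-by-word "streaming" is a simplification of real token chunks.

```python
import asyncio
from typing import Iterator, List


class ToyModel:
    """Hypothetical model exposing invoke/batch/stream/ainvoke-style methods."""

    def invoke(self, prompt: str) -> str:
        # One input in, one complete output back (here: just uppercase it)
        return prompt.upper()

    def batch(self, prompts: List[str]) -> List[str]:
        # Many inputs in, a list of outputs back; a plain loop in this toy
        return [self.invoke(p) for p in prompts]

    def stream(self, prompt: str) -> Iterator[str]:
        # Yield output piece by piece instead of waiting for the full result
        for word in self.invoke(prompt).split():
            yield word

    async def ainvoke(self, prompt: str) -> str:
        # Async variant: callers can await many requests concurrently
        await asyncio.sleep(0)
        return self.invoke(prompt)


model = ToyModel()
print(model.invoke("hello world"))        # HELLO WORLD
print(model.batch(["a", "b"]))            # ['A', 'B']
print(list(model.stream("hello world")))  # ['HELLO', 'WORLD']
print(asyncio.run(model.ainvoke("hi")))   # HI
```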

Python SDK and API Reference

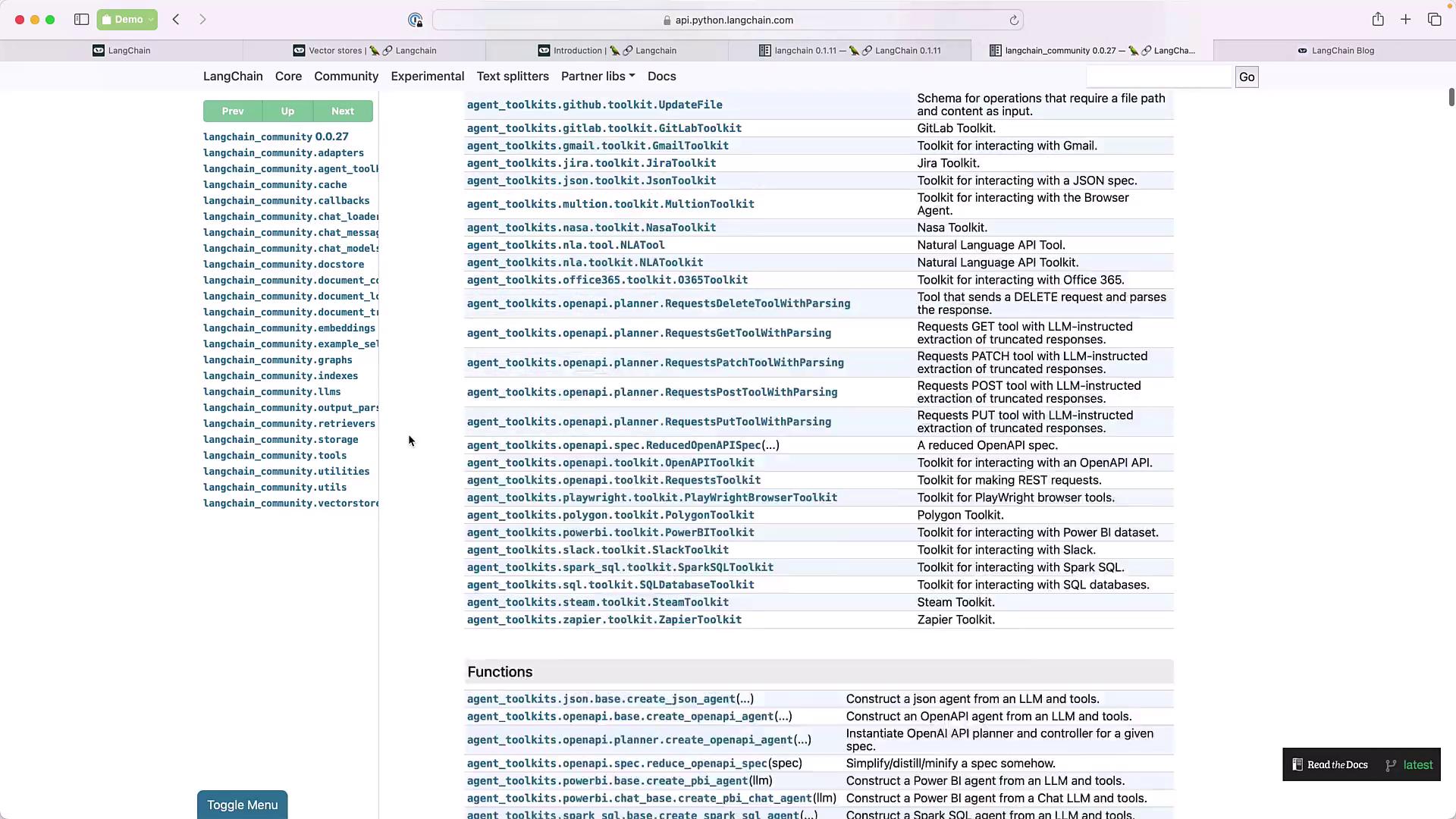

Every LangChain component is documented under the API reference. You’ll find details for agents, language models, chains, toolkits, and community modules.

Agents

Language Models

Agent Toolkits

A variety of toolkits help you build and customize agents:

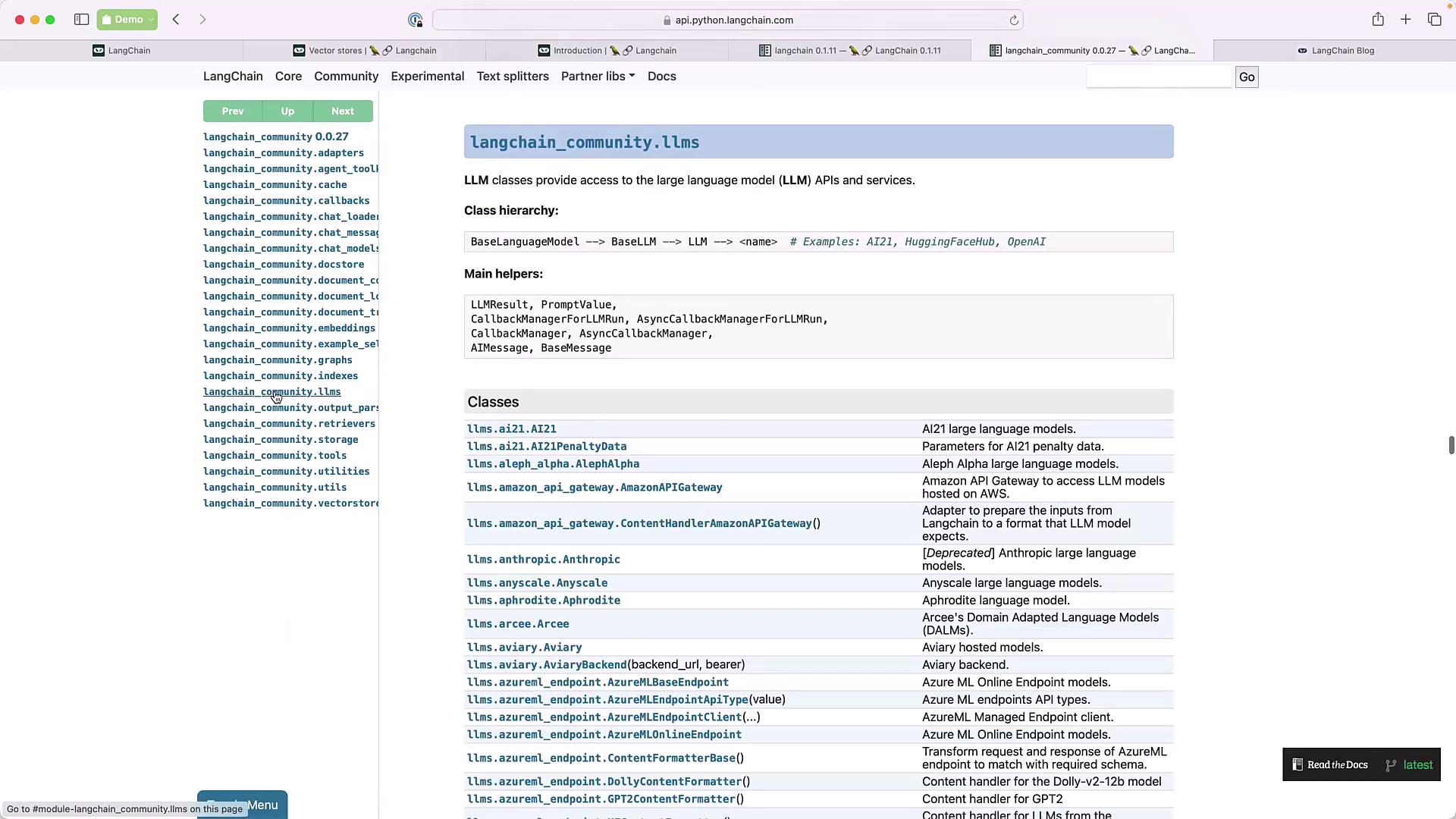

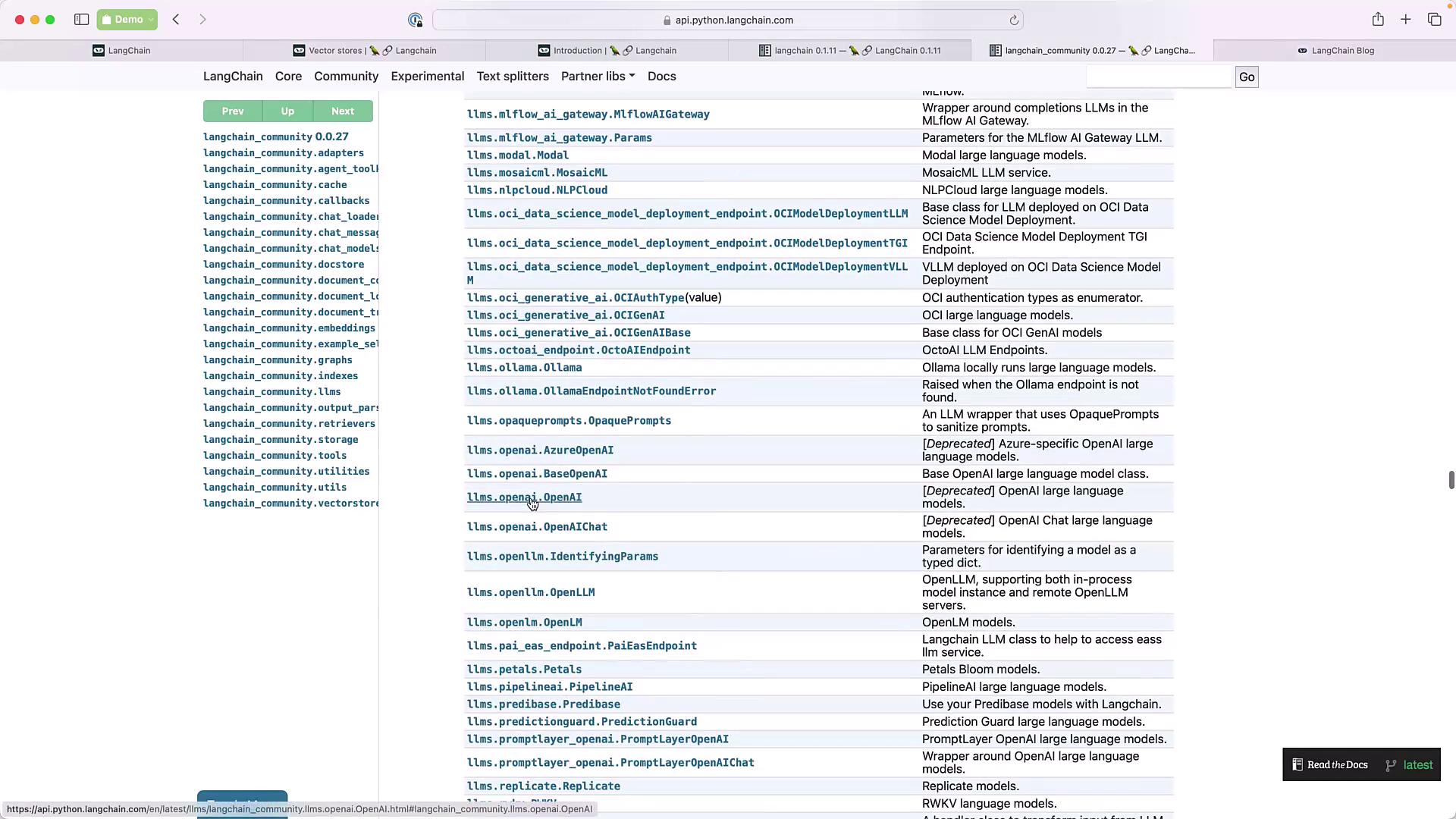
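A toolkit groups related tools that are meant to be used together, and an agent decides which tool to call for a given task. The sketch below models a toolkit as a plain dictionary of named functions. All names (`TEXT_TOOLKIT`, `toy_agent`, the two tools) are hypothetical. In a real agent the LLM chooses the tool; here an exact name lookup on a `tool_name: argument` task string stands in for that decision.

```python
from typing import Callable, Dict

Tool = Callable[[str], str]


def word_count(text: str) -> str:
    """Count whitespace-separated words in the input."""
    return str(len(text.split()))


def reverse_text(text: str) -> str:
    """Return the input with its characters reversed."""
    return text[::-1]


# Hypothetical toolkit: a named bundle of tools meant to be used together
TEXT_TOOLKIT: Dict[str, Tool] = {
    "word_count": word_count,
    "reverse_text": reverse_text,
}


def toy_agent(task: str, toolkit: Dict[str, Tool]) -> str:
    """Route a 'tool_name: argument' task to a tool and return its observation.

    A real agent asks the LLM which tool to call; an exact name lookup
    stands in for that decision here.
    """
    requested, _, argument = task.partition(":")
    tool = toolkit.get(requested.strip())
    if tool is None:
        return f"No tool named {requested.strip()!r} in this toolkit."
    return tool(argument.strip())


print(toy_agent("word_count: the quick brown fox", TEXT_TOOLKIT))  # 4
print(toy_agent("reverse_text: agent", TEXT_TOOLKIT))             # tnega
```

Customizing an agent then amounts to handing it a different dictionary of tools, which is the role the toolkits above play.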

Community LLMs and Modules

Community contributions extend core functionality. Browse community LLM implementations and modules:

Code Examples

Initializing an OpenAI Chat Model

Creating an LLMChain
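An `LLMChain` binds a prompt template to a language model. In LangChain it is constructed as `LLMChain(llm=..., prompt=...)`, and recent releases deprecate it in favor of composing `prompt | llm`, one more reason to pin your version. The library-free sketch below mimics that binding with a hypothetical `ToyLLMChain` class and an offline stub model, `shouting_llm`. It illustrates the idea and is not LangChain's implementation. Feeding the first chain's output dict into a second chain also previews the multi-step orchestration that chains exist for.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ToyLLMChain:
    """Hypothetical miniature chain: a prompt template bound to a model callable."""
    template: str
    llm: Callable[[str], str]
    output_key: str = "text"  # key under which the model's answer is stored

    def invoke(self, inputs: Dict[str, str]) -> Dict[str, str]:
        # Format the prompt, call the model, and return inputs plus the new output
        prompt = self.template.format(**inputs)
        return {**inputs, self.output_key: self.llm(prompt)}


def shouting_llm(prompt: str) -> str:
    """Offline stub standing in for a real model call."""
    return prompt.upper()


title_chain = ToyLLMChain("Write a title about {topic}", shouting_llm, output_key="title")
tagline_chain = ToyLLMChain("Tagline for: {title}", shouting_llm, output_key="tagline")

step1 = title_chain.invoke({"topic": "rivers"})
step2 = tagline_chain.invoke(step1)  # the second chain consumes the first one's output
print(step2["tagline"])  # TAGLINE FOR: WRITE A TITLE ABOUT RIVERS
```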

Exploring Chains

Discover all chain implementations in the API reference: