What Is a Load Balancer?

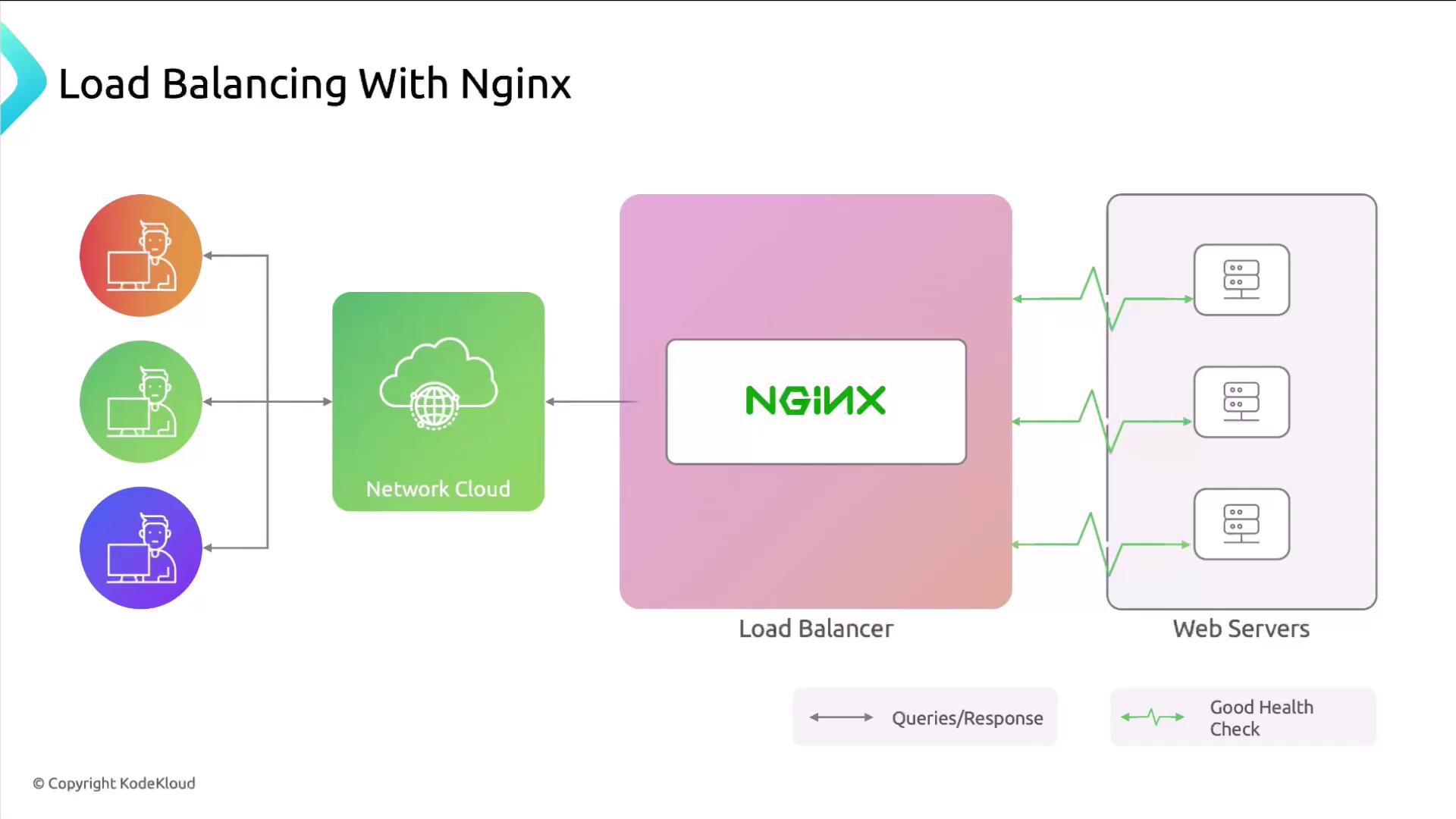

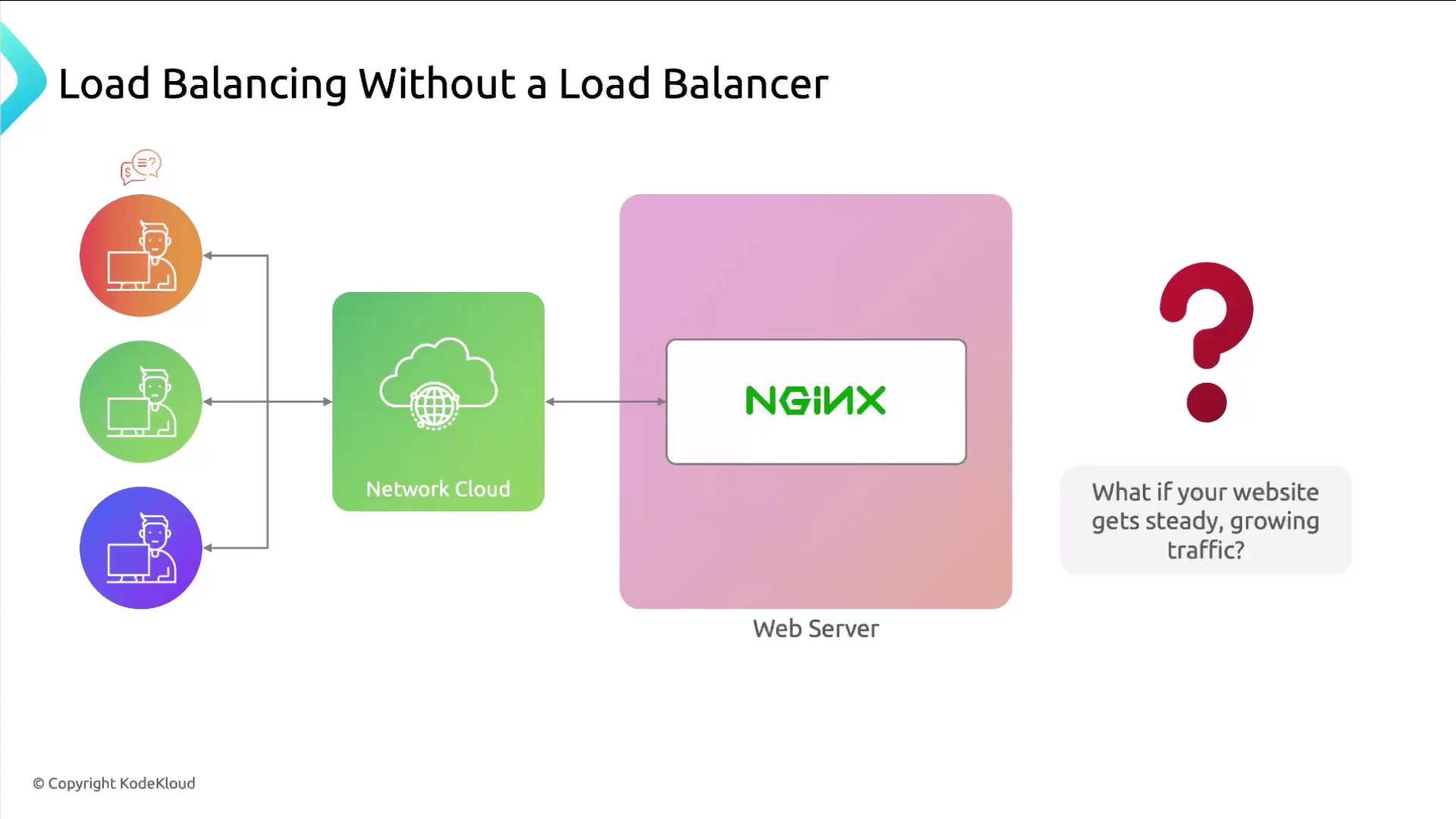

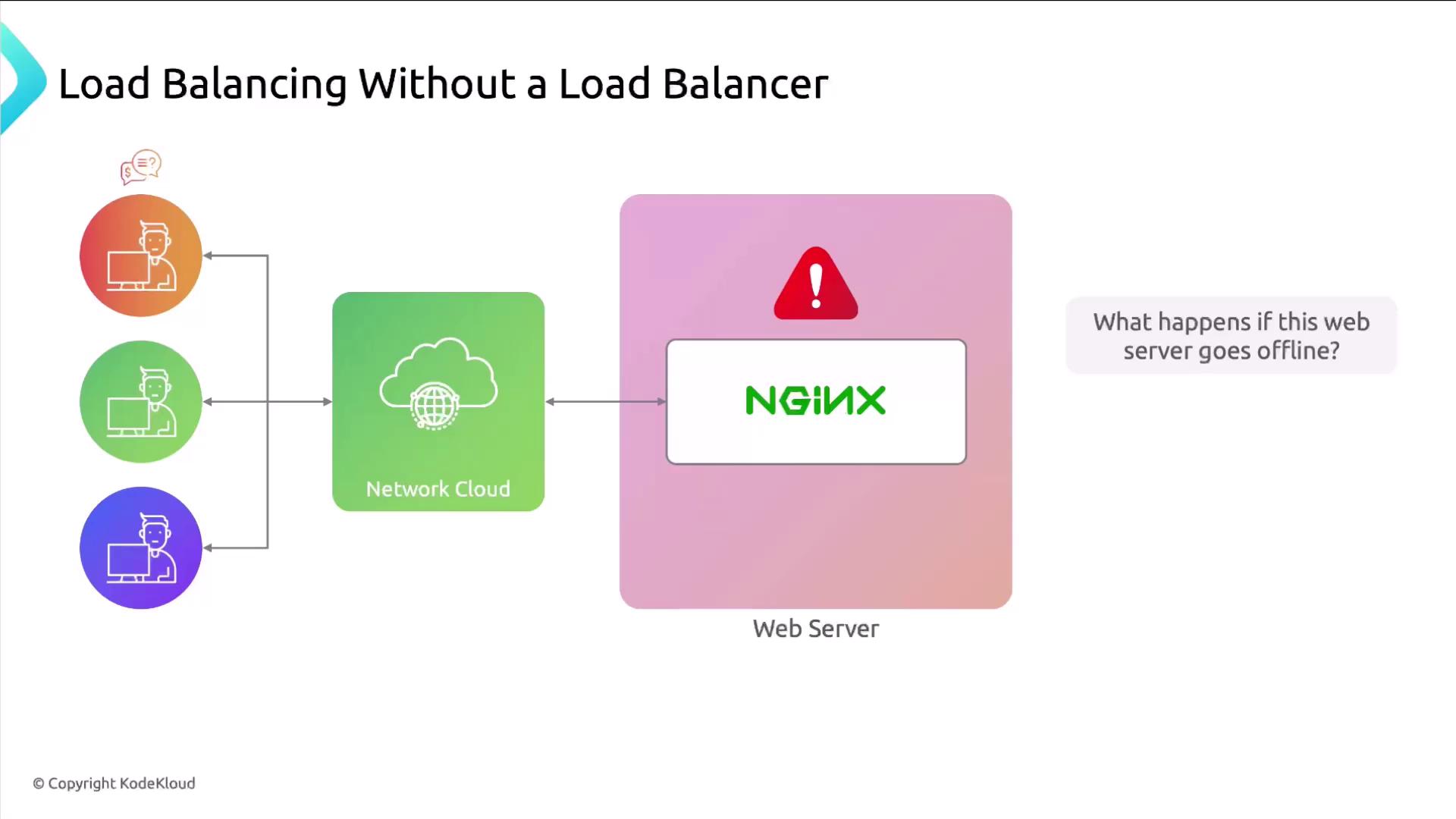

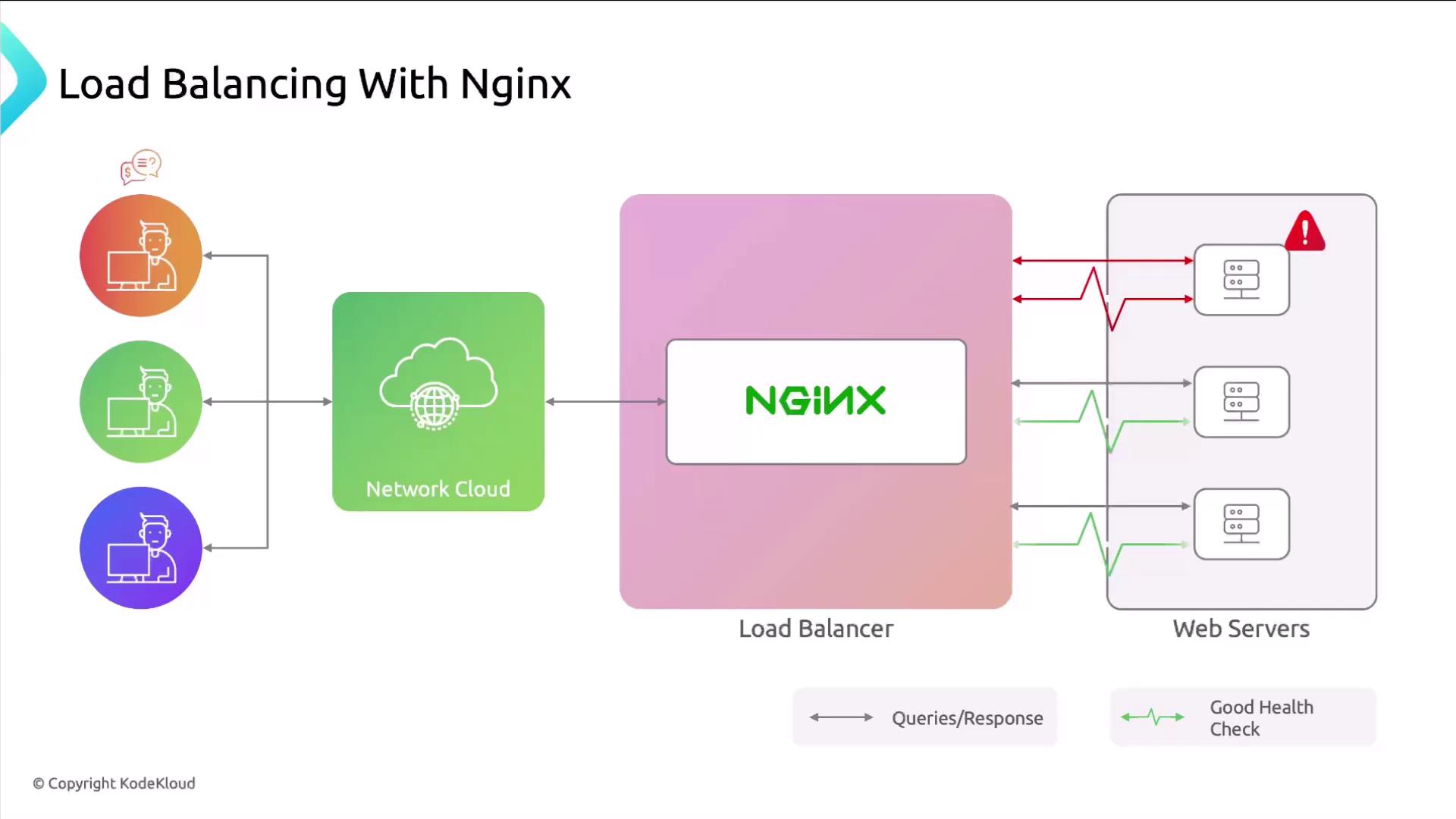

A load balancer is a network device—software or hardware—that distributes incoming traffic across multiple backend servers. It prevents any single server from becoming a performance bottleneck or single point of failure.

Why Use Nginx as a Load Balancer?

Nginx not only distributes traffic but also performs health checks on backend servers. When a node fails, Nginx automatically marks it unhealthy and stops sending traffic its way, keeping your site available on remaining nodes.

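Open-source Nginx does this passively. If requests to a server fail `max_fails` times within `fail_timeout` (defaults: 1 failure and 10 seconds), Nginx excludes that server from the pool for `fail_timeout`. Both are parameters of the `server` directive inside an `upstream` block. Active health checks, which probe servers on a schedule with configurable intervals and pass/fail thresholds, use the `health_check` directive and are available only in NGINX Plus.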
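Here is a toy Python model of passive health checking that makes the exclusion rule concrete. It loosely follows nginx's `max_fails` and `fail_timeout` server parameters. The `PassiveHealth` class, its methods, and the server names are hypothetical. This is a simplified illustration, not nginx's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class PassiveHealth:
    """Toy model of passive health checking (hypothetical class).

    Loosely follows nginx's max_fails / fail_timeout semantics: a server
    that fails max_fails times within fail_timeout seconds is excluded
    for fail_timeout seconds.
    """
    max_fails: int = 1          # nginx default for max_fails
    fail_timeout: float = 10.0  # nginx default for fail_timeout, in seconds
    _fails: dict = field(default_factory=dict, init=False)       # server -> recent failure times
    _down_until: dict = field(default_factory=dict, init=False)  # server -> time it is eligible again

    def record_failure(self, server: str, now: float) -> None:
        # Keep only failures that fall inside the fail_timeout window.
        recent = [t for t in self._fails.get(server, []) if now - t < self.fail_timeout]
        recent.append(now)
        if len(recent) >= self.max_fails:
            # Too many failures: exclude the server and reset its counter.
            self._down_until[server] = now + self.fail_timeout
            recent = []
        self._fails[server] = recent

    def available(self, servers: list, now: float) -> list:
        # Servers whose exclusion period (if any) has expired.
        return [s for s in servers if now >= self._down_until.get(s, 0.0)]


health = PassiveHealth(max_fails=2, fail_timeout=10.0)
pool = ["app1", "app2"]
health.record_failure("app1", now=0.0)
print(health.available(pool, now=1.0))   # ['app1', 'app2']: one failure is below max_fails
health.record_failure("app1", now=2.0)
print(health.available(pool, now=3.0))   # ['app2']: app1 is excluded until t=12
print(health.available(pool, now=12.5))  # ['app1', 'app2']: exclusion has expired
```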

Configuring Upstream Pools

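An `upstream` block groups your backend servers under a single logical name. You then reference that name with the `proxy_pass` directive inside a `server` block, for example `proxy_pass http://backend;` for an upstream named `backend`.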
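Nginx configuration is not Python, but the name lookup can be modeled in a few lines. This sketch shows how a `proxy_pass` target whose host matches an upstream name resolves to the whole server group. The `UPSTREAMS` table, the `resolve_proxy_pass` function, and the 10.0.0.x addresses are hypothetical. The fallback for non-upstream hosts is simplified, since real nginx would also resolve hostnames via DNS.

```python
from urllib.parse import urlsplit

# Hypothetical stand-in for an nginx "upstream backend { ... }" block:
# the logical name maps to the servers it groups (addresses are made up).
UPSTREAMS: dict = {
    "backend": ["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"],
}


def resolve_proxy_pass(target: str, upstreams: dict) -> list:
    """Return the candidate servers for a proxy_pass-style URL.

    If the URL's host names an upstream group, the whole group is returned;
    otherwise host[:port] is treated as a single backend (simplified).
    """
    parts = urlsplit(target)
    if parts.hostname in upstreams:
        return upstreams[parts.hostname]
    return [parts.netloc]


print(resolve_proxy_pass("http://backend", UPSTREAMS))
# ['10.0.0.11:8080', '10.0.0.12:8080', '10.0.0.13:8080']
print(resolve_proxy_pass("http://127.0.0.1:9000", UPSTREAMS))
# ['127.0.0.1:9000']
```

The point of the indirection is that request-handling config refers only to the name, so servers can be added to or removed from the group without touching any `proxy_pass` lines.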

Load Balancing Methods

Nginx supports multiple algorithms to suit different workloads. Here’s a quick summary:

| Algorithm | Use Case | Directive |
|---|---|---|
| Round Robin | Even distribution (default) | None (default) |
| Weighted Round Robin | Prioritize higher-capacity servers | `weight=` parameter on `server` |
| IP Hash | Sticky sessions based on client IP | `ip_hash` |
| Least Connections | Send to the server with the fewest active connections | `least_conn` |
| Least Time | Fastest response (NGINX Plus only) | `least_time header` or `least_time last_byte` |

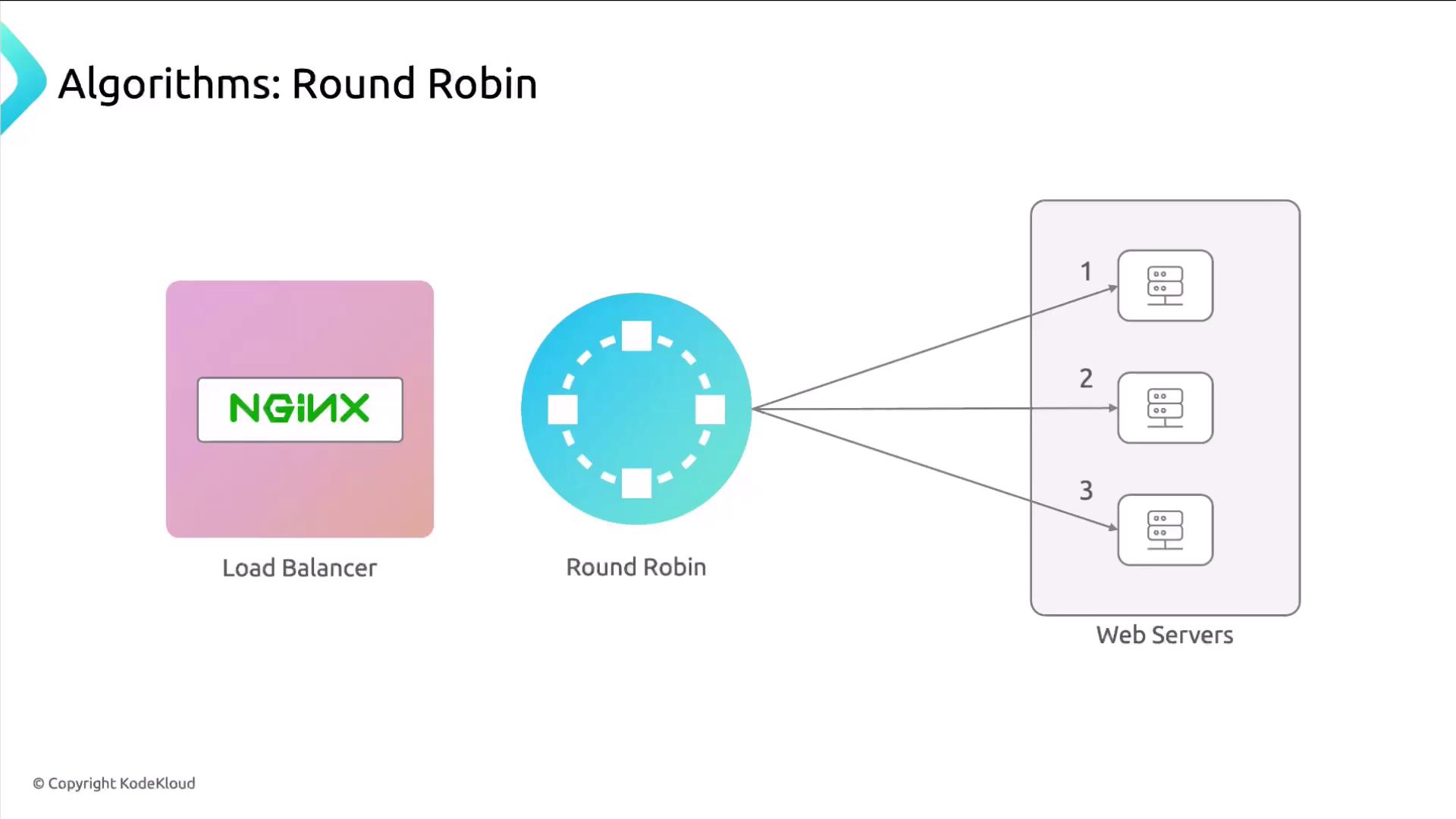

1. Round-Robin (Default)

Distributes requests evenly in a circular order.
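A minimal Python sketch of the circular order, assuming three hypothetical servers named `app1` to `app3`. It ignores server health, which nginx would also take into account.

```python
from itertools import cycle

servers = ["app1", "app2", "app3"]

# cycle() walks the list in order and wraps around forever,
# which is the circular order round-robin uses.
rr = cycle(servers)
picks = [next(rr) for _ in range(5)]
print(picks)  # ['app1', 'app2', 'app3', 'app1', 'app2']
```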

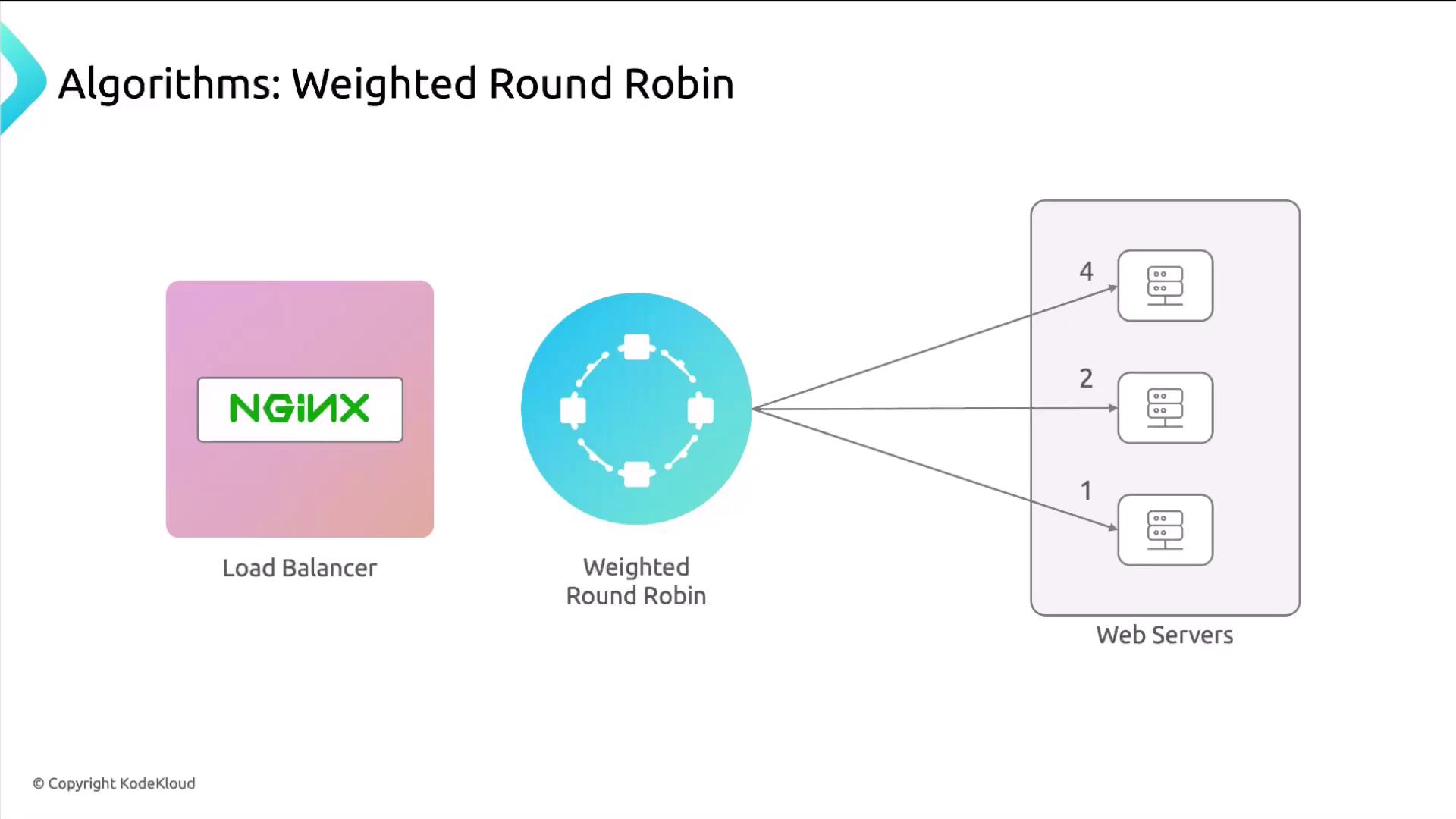

2. Weighted Round-Robin

Assign heavier weights to more powerful servers so they receive a larger share of traffic.
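One common way to implement this is smooth weighted round-robin, which interleaves picks instead of sending a heavy server's whole share in one burst. Nginx's round-robin module is generally described as using this smooth variant, but treat the sketch below as an illustration rather than nginx's source. The function name and server names are hypothetical.

```python
def smooth_weighted_rr(weights: dict, n: int) -> list:
    """Pick n servers using smooth weighted round-robin.

    Each round, every server's current weight grows by its configured
    weight; the server with the highest current weight is chosen and its
    current weight is reduced by the total of all weights.
    """
    total = sum(weights.values())
    current = {server: 0 for server in weights}
    picks = []
    for _ in range(n):
        for server, weight in weights.items():
            current[server] += weight
        # max() returns the first server with the highest current weight.
        chosen = max(current, key=current.get)
        current[chosen] -= total
        picks.append(chosen)
    return picks


print(smooth_weighted_rr({"big": 5, "small1": 1, "small2": 1}, 7))
# ['big', 'big', 'small1', 'big', 'small2', 'big', 'big']
```

Over each cycle of 7 requests, `big` (weight 5) receives 5 and each small server receives 1, yet the small servers are spread through the sequence rather than bunched at the end.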

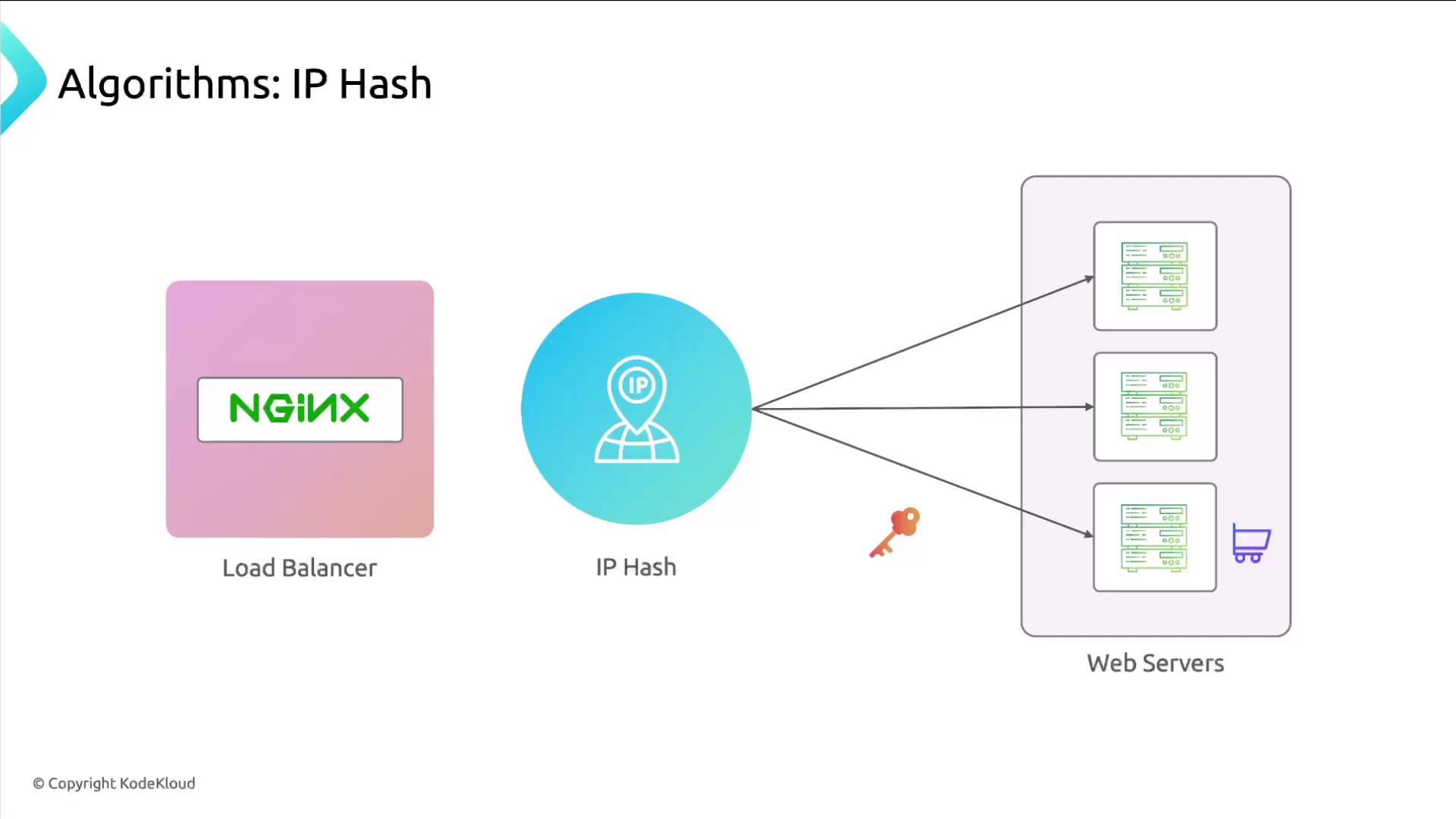

3. IP Hash (Sticky Sessions)

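Routes requests from the same client IP to the same server, as long as that server stays available, which makes it useful for session persistence when session data is stored in memory on each backend. For IPv4 clients, Nginx hashes only the first three octets of the address, so all clients in the same /24 network share a backend.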

Sticky sessions can lead to uneven load when some clients, or many users behind a single NAT gateway or proxy, generate more traffic than others. Use `ip_hash` only when the backends cannot share session state.
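The mapping can be sketched in Python as a deterministic hash of the client address modulo the number of servers. Following nginx's documented behaviour, the key is the first three octets of an IPv4 address (the whole address for IPv6). CRC32 is a stand-in chosen for this sketch, since nginx uses its own internal hash function, and `ip_hash_pick` and the server names are hypothetical.

```python
import ipaddress
import zlib


def ip_hash_pick(client_ip: str, servers: list) -> str:
    """Deterministically map a client IP to one backend.

    Only the first three octets of an IPv4 address (the whole address
    for IPv6) form the hash key. CRC32 stands in for nginx's own hash.
    """
    addr = ipaddress.ip_address(client_ip)
    key = addr.packed[:3] if addr.version == 4 else addr.packed
    return servers[zlib.crc32(key) % len(servers)]


servers = ["app1", "app2", "app3"]
first = ip_hash_pick("203.0.113.10", servers)
# A second client in the same /24 network lands on the same backend.
print(first == ip_hash_pick("203.0.113.99", servers))  # True
# Repeated requests from one client always map to the same backend.
print(first == ip_hash_pick("203.0.113.10", servers))  # True
```

The same-/24 behaviour is also why ip_hash can skew load: an entire office network behind one prefix is pinned to a single server.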

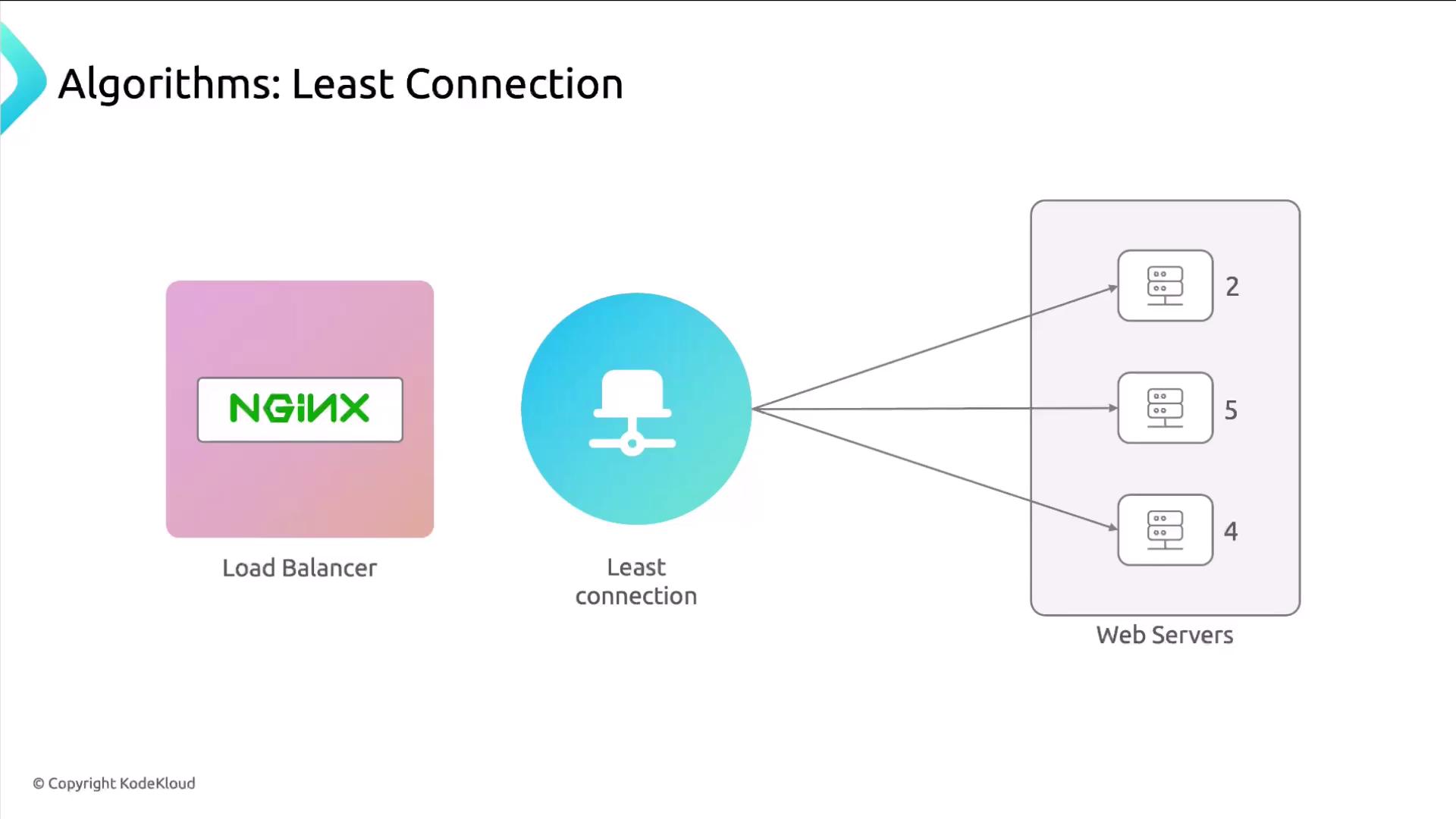

4. Least Connections

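Routes each new request to the server with the fewest active connections, taking server weights into account. This suits workloads where requests take very different amounts of time to complete, since plain round-robin can keep sending new requests to a server that is still busy with slow ones.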
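A minimal Python sketch of the selection rule, assuming connections are compared per unit of weight (a weight-2 server may hold twice the connections of a weight-1 server before it stops being preferred). The function and server names are hypothetical, and ties are broken by dict order here, whereas nginx breaks ties with weighted round-robin.

```python
from typing import Dict, Optional


def least_conn_pick(active: Dict[str, int],
                    weights: Optional[Dict[str, int]] = None) -> str:
    """Return the server with the fewest active connections per unit of weight.

    Ties go to the first server in dict order (a simplification).
    """
    weights = weights or {}
    return min(active, key=lambda s: active[s] / weights.get(s, 1))


busy = {"app1": 4, "app2": 1, "app3": 2}
print(least_conn_pick(busy))               # app2
# With weight 8, app1 has 4 / 8 = 0.5 connections per unit of weight,
# fewer than app2 (1 / 1), so it is preferred.
print(least_conn_pick(busy, {"app1": 8}))  # app1

# Simulate a burst: each new request goes to the current least-loaded server.
for _ in range(3):
    busy[least_conn_pick(busy)] += 1
print(busy)  # {'app1': 4, 'app2': 3, 'app3': 3}
```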