Before diving into autoscaling in Kubernetes, it helps to understand how scaling worked on traditional physical servers.

Traditional Scaling Concepts

Historically, applications were deployed on physical servers with fixed CPU and memory capacities. When demand increased and resources were exhausted, the only option was to perform vertical scaling. This involved:

- Shutting down the application.

- Upgrading the CPU or memory.

- Restarting the server.

Key Points:

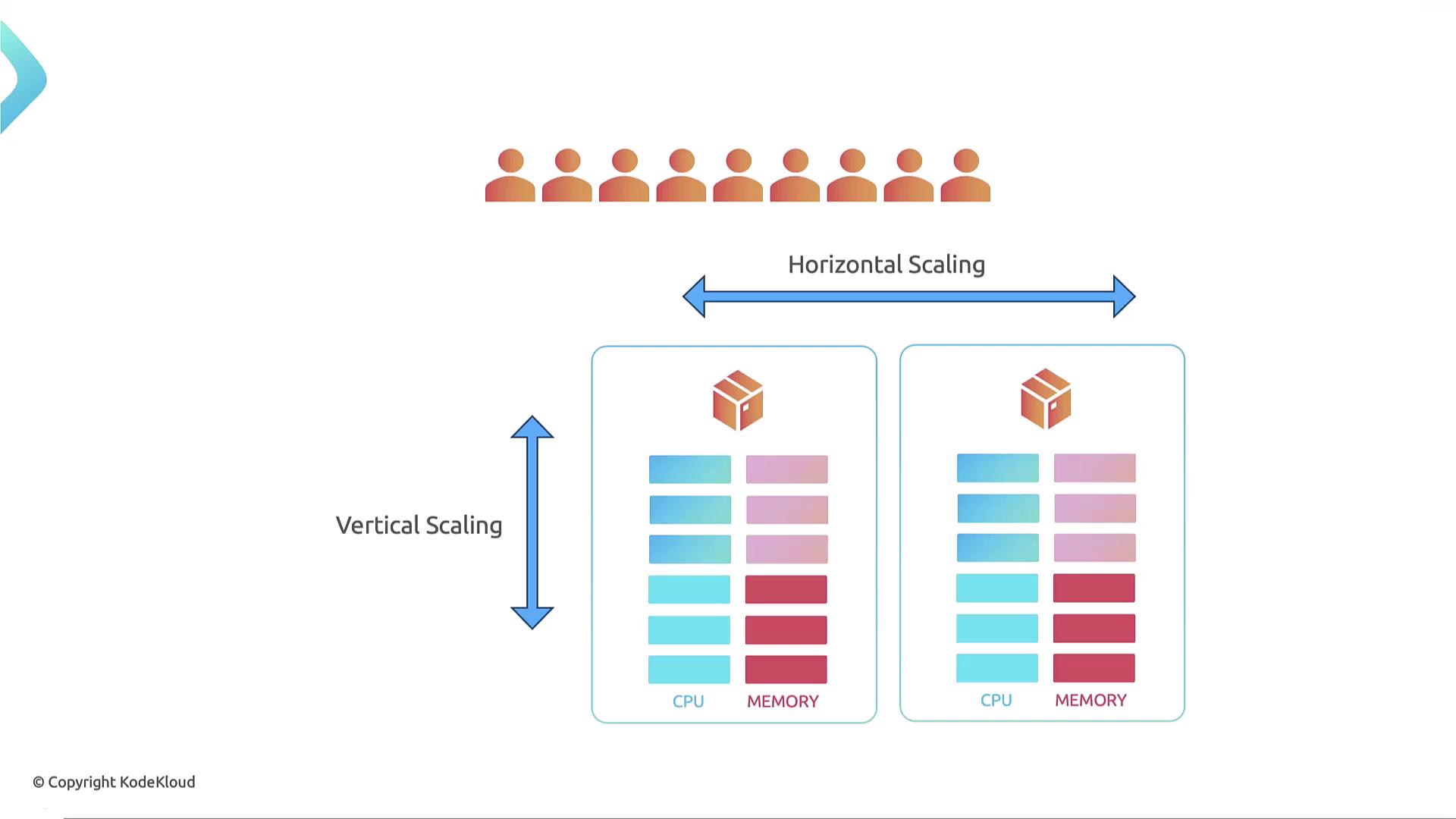

- Vertical Scaling: Increases resources (CPU, memory) of an existing server.

- Horizontal Scaling: Increases server count by adding more instances.

Scaling in Kubernetes

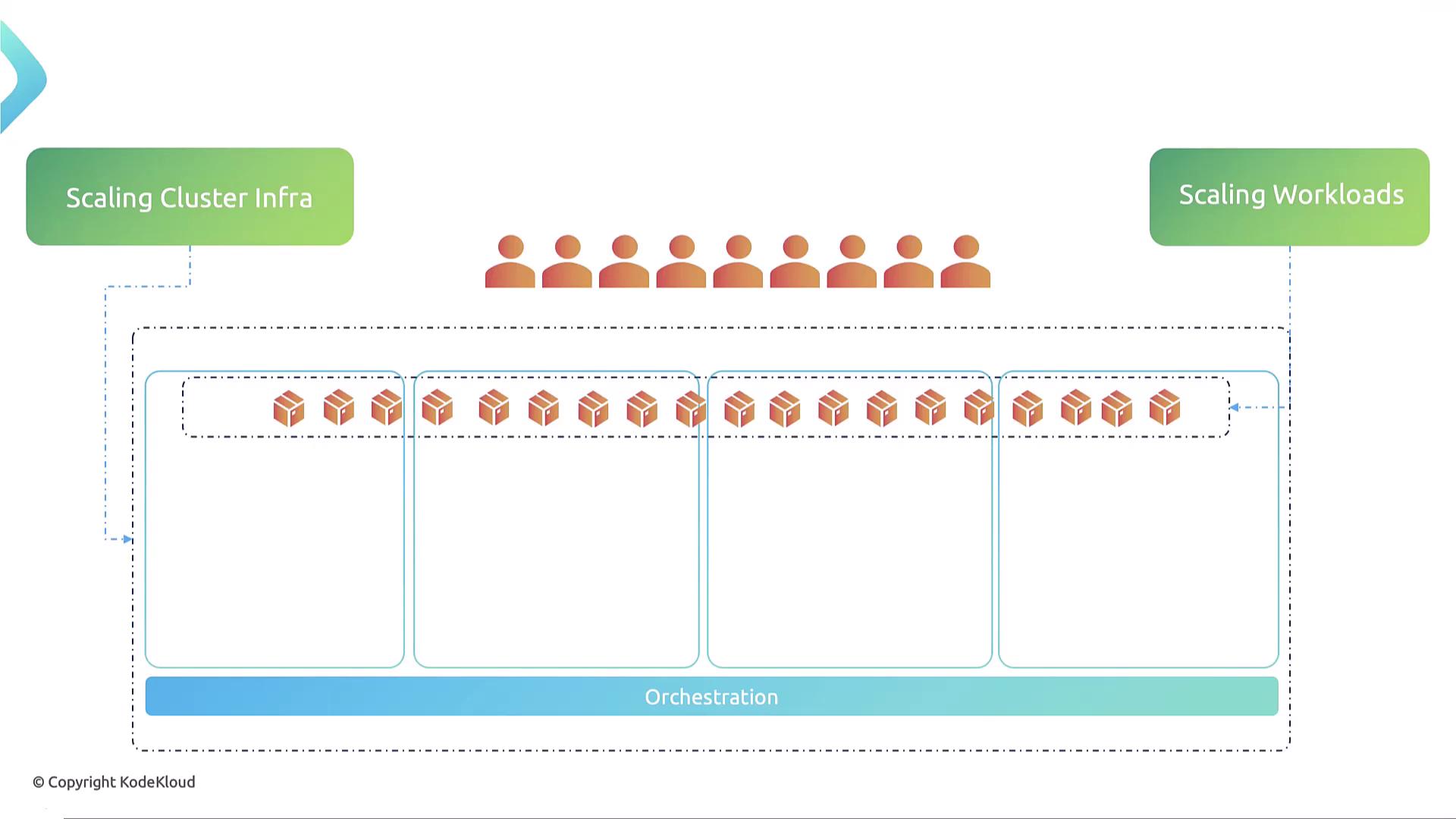

Kubernetes is specifically designed for hosting containerized applications and incorporates scaling based on current demands. It supports two main scaling types:

- Workload Scaling: Adjusting the number of containers or Pods running in the cluster.

- Cluster (Infrastructure) Scaling: Adding or removing nodes (servers) from the cluster.

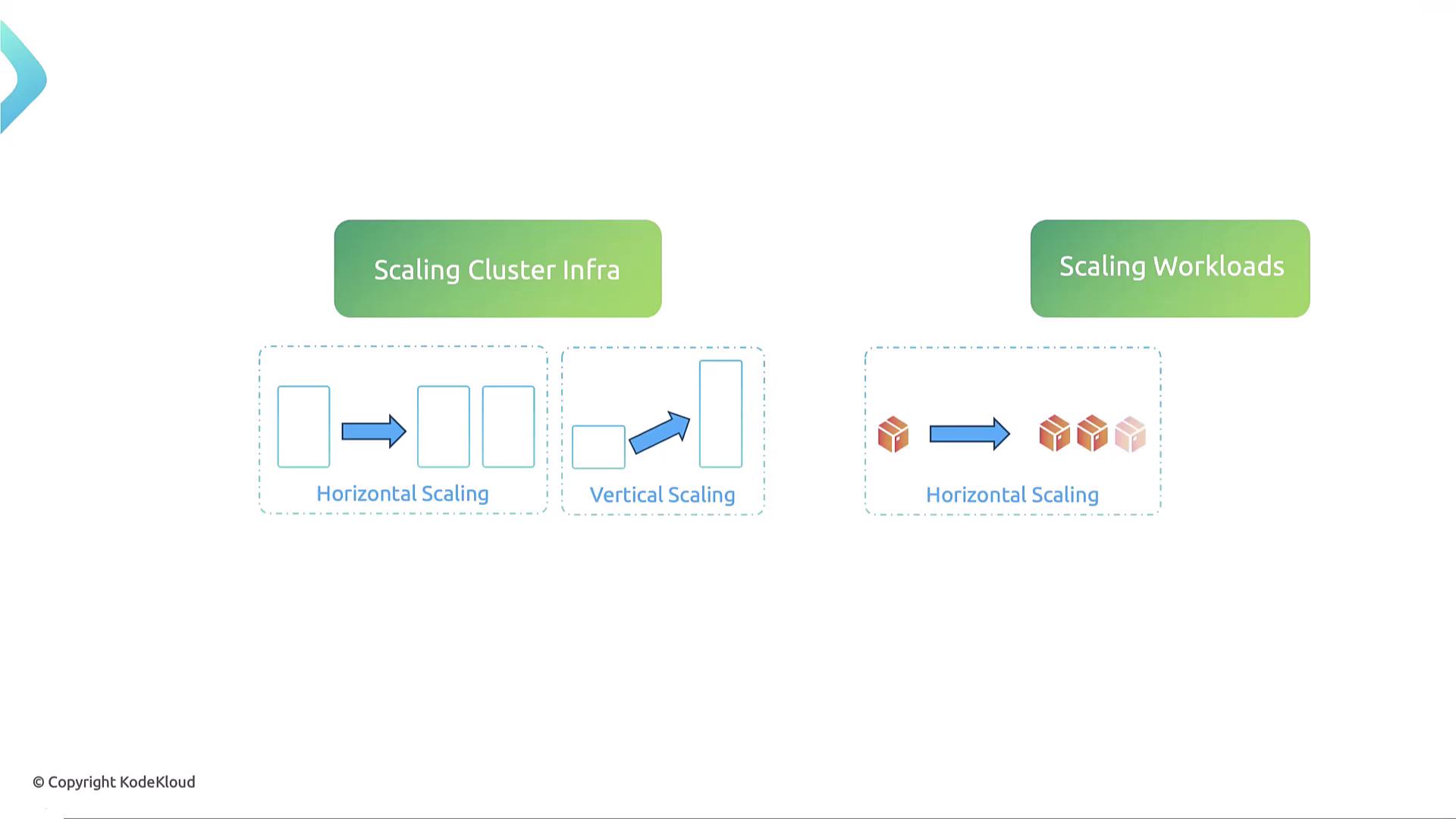

- Cluster Infrastructure Scaling:

  - Horizontal Scaling: Add more nodes.

  - Vertical Scaling: Enhance the resources (CPU, memory) of existing nodes.

- Workload Scaling:

  - Horizontal Scaling: Create additional Pods.

  - Vertical Scaling: Modify the resource limits and requests for existing Pods.

Approaches to Scaling in Kubernetes

Kubernetes supports both manual and automated scaling methods.

Manual Scaling

Manual scaling requires intervention from the user:

- Infrastructure Scaling (Horizontal): Provision new nodes and join them to the cluster, for example with `kubeadm join` on clusters bootstrapped with kubeadm.

- Workload Scaling (Horizontal): Adjust the number of Pods manually with `kubectl scale`.

- Pod Resource Adjustment (Vertical): Edit the Deployment, StatefulSet, or ReplicaSet (for example with `kubectl edit`) to modify resource limits and requests.
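The three manual operations above correspond to standard CLI invocations. As a rough sketch, the Python helpers below only build and print those command lines rather than running them. The Deployment name `my-app`, the API endpoint, the token, and the CA hash are placeholder values chosen for illustration, and `kubeadm join` applies only to clusters that were bootstrapped with kubeadm.

```python
import shlex


def join_node_cmd(api_endpoint, token, ca_cert_hash):
    """Command run on a NEW node to join it to a kubeadm-based cluster."""
    return [
        "kubeadm", "join", api_endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
    ]


def scale_cmd(kind, name, replicas):
    """Command that sets the replica count of a workload (horizontal scaling)."""
    return ["kubectl", "scale", f"{kind}/{name}", f"--replicas={replicas}"]


def edit_cmd(kind, name):
    """Command that opens the workload manifest in an editor, where the
    resources.requests and resources.limits fields can be changed."""
    return ["kubectl", "edit", f"{kind}/{name}"]


if __name__ == "__main__":
    # All values below are hypothetical placeholders, not real credentials.
    commands = [
        join_node_cmd("10.0.0.10:6443", "abcdef.0123456789abcdef", "sha256:0123"),
        scale_cmd("deployment", "my-app", 5),
        edit_cmd("deployment", "my-app"),
    ]
    for argv in commands:
        # shlex.join renders the argument list as a copy-pastable shell line.
        print(shlex.join(argv))
```

Building the argument lists separately from executing them keeps the sketch safe to run on a machine without cluster access; passing a list to `subprocess.run` would be one way to execute them against a real cluster.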

Automated Scaling

Automation in Kubernetes simplifies scaling and ensures efficient resource management:

- Kubernetes Cluster Autoscaler: Automatically adjusts the number of nodes in the cluster by adding or removing nodes when needed.

- Horizontal Pod Autoscaler (HPA): Monitors metrics such as CPU or memory utilization and adjusts the number of Pod replicas dynamically.

- Vertical Pod Autoscaler (VPA): Automatically adjusts the CPU and memory requests and limits of Pods based on observed usage.
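To make the HPA's behavior concrete, the Kubernetes documentation describes its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below is a simplified illustration of that formula, not the controller's actual code. The function name and the `min_replicas`/`max_replicas` defaults are assumptions made for this example; the 0.1 tolerance mirrors the documented default. The real controller also accounts for missing metrics, Pods that are not ready, and stabilization windows, all of which are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA-style replica calculation (illustrative only)."""
    ratio = current_metric / target_metric

    # If the metric is close enough to the target, leave the count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    # Scale proportionally to how far the metric is from the target,
    # rounding up so capacity is never under-provisioned.
    desired = math.ceil(current_replicas * ratio)

    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 replicas averaging 30% CPU against a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
    # 63% against a 60% target is within tolerance: no change.
    print(desired_replicas(4, 63, 60))
```

Rounding up and clamping to a minimum and maximum keeps the result conservative: the workload gets at least enough replicas to bring the average metric back to the target, but never more than the configured ceiling.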

Automated scaling mechanisms in Kubernetes allow your applications and infrastructure to adapt quickly to changing loads, reducing manual effort and ensuring optimal performance.