Docker Networking Modes

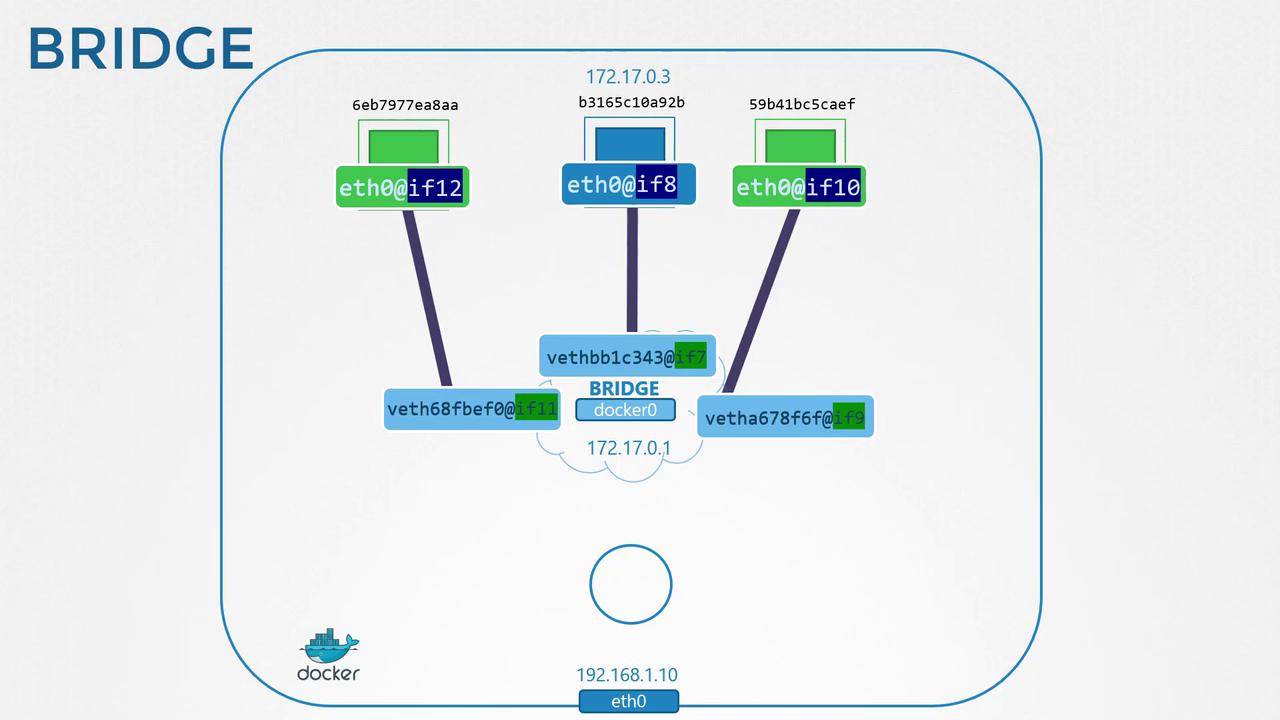

Docker offers several network modes on a single host (e.g., host IP 192.168.1.10 on eth0):

| Mode | Behavior | Example |
|---|---|---|
| none | No network interfaces except loopback | `docker run --network none nginx` |
| host | Shares the host’s network stack directly | `docker run --network host nginx` |
| bridge | Default: containers attach to the `docker0` bridge | `docker run nginx` |

none

The container has only a loopback interface and cannot send or receive external traffic.

host

The container shares the host network namespace directly. Using `--network host` removes network isolation: ports the container listens on are bound directly on the host and may conflict with other services.

bridge

The default mode attaches containers to the `docker0` bridge, which uses the 172.17.0.0/16 subnet by default. Each container receives an IP address on this network.
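To make the subnet concrete, here is a minimal Python sketch that checks whether an address falls inside the default bridge range. The helper name `on_default_bridge` and the container address 172.17.0.2 are illustrative assumptions; only the subnet and the host IP come from the text.

```python
import ipaddress

# Default docker0 subnet quoted in the text.
BRIDGE_SUBNET = ipaddress.ip_network("172.17.0.0/16")


def on_default_bridge(address: str) -> bool:
    """Return True if the address falls inside the default bridge subnet."""
    return ipaddress.ip_address(address) in BRIDGE_SUBNET


if __name__ == "__main__":
    # 172.17.0.2 is a hypothetical container address;
    # 192.168.1.10 is the example host IP from the text.
    for addr in ("172.17.0.2", "192.168.1.10"):
        print(addr, on_default_bridge(addr))
    # Usable addresses in a /16 (network and broadcast excluded).
    print(BRIDGE_SUBNET.num_addresses - 2)
```

A check like this can help when deciding whether an address seen in logs belongs to a container on the default bridge or to the host's own LAN.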

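Listing the Docker networks shows the built-in networks (bridge, host, and none), and the host’s interface list includes the bridge interface `docker0`.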
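As a rough sketch, the networks and host interfaces could be listed from Python as follows. It assumes the `docker` CLI is on the PATH (an empty list is returned otherwise). The function names are hypothetical helpers, not part of any Docker API.

```python
import shutil
import socket
import subprocess


def parse_network_names(output: str) -> list[str]:
    """Split `docker network ls --format '{{.Name}}'` output into names."""
    return [line.strip() for line in output.splitlines() if line.strip()]


def docker_network_names() -> list[str]:
    """Ask the Docker CLI for network names; return [] if docker is missing."""
    if shutil.which("docker") is None:
        return []
    result = subprocess.run(
        ["docker", "network", "ls", "--format", "{{.Name}}"],
        capture_output=True, text=True, check=False,
    )
    return parse_network_names(result.stdout)


def host_interface_names() -> list[str]:
    """Names of the network interfaces visible in the current namespace."""
    return [name for _index, name in socket.if_nameindex()]


if __name__ == "__main__":
    print("docker networks:", docker_network_names())
    interfaces = host_interface_names()
    print("host interfaces:", interfaces)
    print("docker0 present:", "docker0" in interfaces)
```

Run on the host, the last line indicates whether the `docker0` bridge interface exists. Run inside a bridge-mode container, the same check would normally not see it, because the container has its own namespace.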

Docker and Network Namespaces

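Each container runs in its own Linux network namespace, and these namespaces can be inspected from the host. Docker connects each container namespace to the bridge with a virtual Ethernet (veth) pair:

- One end attaches to the host bridge (`docker0`).
- The other end goes inside the container namespace as `eth0`.

The container’s `eth0` receives an address from the bridge subnet, 172.17.0.0/16.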
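One way to see namespace separation, sketched here under the assumption of a Linux host with `/proc` mounted, is to compare the namespace identifier that the kernel exposes for each process. `netns_id` and `same_netns` are hypothetical helper names. Reading another user's process entry may require root.

```python
import os


def netns_id(pid="self"):
    """Return a process's network-namespace identifier (of the form 'net:[<inode>]').

    Returns None where /proc/<pid>/ns/net is unavailable (non-Linux hosts,
    missing process, or insufficient privileges).
    """
    try:
        return os.readlink(f"/proc/{pid}/ns/net")
    except OSError:
        return None


def same_netns(pid_a, pid_b) -> bool:
    """True when both processes report the same, known namespace identifier."""
    a, b = netns_id(pid_a), netns_id(pid_b)
    return a is not None and a == b


if __name__ == "__main__":
    print("this process:", netns_id())
    # A container's host PID could be obtained with
    # `docker inspect --format '{{.State.Pid}}' <container>` and passed to
    # same_netns() together with a host PID to compare the two namespaces.
```

A bridge-mode container would be expected to report a different identifier from host processes, while a container started with `--network host` should report the same one.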

Container-to-Container and Host Communication

Containers on the same bridge can communicate by IP, and the host can reach them directly. External clients cannot access container IPs on the bridge network without port publishing.
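Reachability can be probed with a plain TCP connection attempt. The sketch below is a generic check and not Docker-specific. The address 172.17.0.2 and port 80 are hypothetical placeholders for a container running a web server on the default bridge.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable networks, and timeouts.
        return False


if __name__ == "__main__":
    # Hypothetical container address and port; substitute real values.
    print(can_connect("172.17.0.2", 80))
```

Run from the Docker host or from another container on the same bridge, the probe would be expected to succeed for a listening container. Run from a different machine on the LAN, it would normally fail, since the bridge subnet is not routed externally.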

Publishing Ports (Port Mapping)

Expose container ports to external clients with `-p hostPort:containerPort`. With host port 8080 published, for example, clients reach the service at http://192.168.1.10:8080.
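The mapping can be sketched in Python as a small parser for the two-part `hostPort:containerPort` form. The helper names are hypothetical, container port 80 is an assumption (the text only shows host port 8080), and other forms accepted by `docker run -p`, such as a bind IP or a `/udp` suffix, are deliberately not handled.

```python
def parse_publish(spec: str) -> tuple[int, int]:
    """Parse a 'hostPort:containerPort' string into a pair of integers."""
    host_port, sep, container_port = spec.partition(":")
    if not sep:
        raise ValueError(f"expected hostPort:containerPort, got {spec!r}")
    ports = (int(host_port), int(container_port))
    for port in ports:
        if not 1 <= port <= 65535:
            raise ValueError(f"port out of range: {port}")
    return ports


def docker_run_args(image: str, publish: str) -> list[str]:
    """Build the argument list for `docker run -d -p <publish> <image>`."""
    parse_publish(publish)  # validate before building the command
    return ["docker", "run", "-d", "-p", publish, image]


def external_url(host_ip: str, publish: str) -> str:
    """URL an external client would use: host IP plus the published host port."""
    host_port, _container_port = parse_publish(publish)
    return f"http://{host_ip}:{host_port}"


if __name__ == "__main__":
    # Container port 80 is assumed for illustration.
    print(" ".join(docker_run_args("nginx", "8080:80")))
    print(external_url("192.168.1.10", "8080:80"))
```

The point to notice is that clients address the host IP and the host port. The container port only matters inside the container namespace.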